Amplitude Analytics generates detailed product analytics on web and mobile application usage to help you make data-driven decisions. You can replicate data from your Amplitude account to a database, data warehouse, or file storage system using Hevo Pipelines.

Note: Hevo fetches data from Amplitude Analytics in a zipped folder to perform the data query.

Source Considerations

- Amplitude Analytics introduces a two-hour delay between receiving data and when that data becomes available for ingestion into Hevo via the Export API.

  For example, data sent between 8–9 PM is uploaded to Amplitude Analytics at 9 PM and becomes available for ingestion at 11 PM.

- Each Export API request in Amplitude Analytics has a 4 GB size limit. If the data for a specific time range exceeds this limit, the API request fails, and Hevo cannot ingest any data for that period. This may result in data inconsistencies or Pipeline failures. To avoid such failures, increase the ingestion frequency so that data is ingested in smaller batches.

- Users on the Growth and Enterprise plans can make up to 500 Behavioral Cohorts Download API requests per month to download cohorts. This API is not available to users on the Starter or Plus plans.

- The Behavioral Cohorts Download API supports a maximum cohort size of 2 million users.
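
The two-hour delay and the benefit of smaller batches can be illustrated with a short sketch. This is not Hevo's implementation. The helper names `latest_exportable` and `export_windows`, and the one-hour default window, are hypothetical and chosen only for illustration. The sketch assumes, as in the example above, that data is uploaded at the end of each hour and becomes exportable two hours later.

```python
from datetime import datetime, timedelta

# Delay between Amplitude receiving data and the data becoming exportable.
EXPORT_DELAY = timedelta(hours=2)


def latest_exportable(now: datetime) -> datetime:
    """Return the end of the most recent hour whose data should be exportable.

    Assumption: data is uploaded on the hour and is available two hours later.
    """
    cutoff = now - EXPORT_DELAY
    return cutoff.replace(minute=0, second=0, microsecond=0)


def export_windows(start: datetime, end: datetime, now: datetime,
                   window: timedelta = timedelta(hours=1)):
    """Split [start, end) into smaller windows, skipping data not yet exportable.

    Smaller windows mean less data per request, which makes it less likely
    that a single request exceeds the 4 GB limit.
    """
    end = min(end, latest_exportable(now))
    windows = []
    cursor = start
    while cursor < end:
        nxt = min(cursor + window, end)
        windows.append((cursor, nxt))
        cursor = nxt
    return windows


# Usage: at 11 PM, a request for 6 PM to 11 PM is trimmed to end at 9 PM.
now = datetime(2025, 1, 1, 23, 0)
windows = export_windows(datetime(2025, 1, 1, 18, 0), now, now)
```

In this sketch, a shorter `window` plays the same role as a higher ingestion frequency: each request covers a shorter time range and therefore less data.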

Limitations

- Hevo does not load data from a column into the Destination table if its size exceeds 16 MB, and skips the Event if it exceeds 40 MB. If the Event contains a column larger than 16 MB, Hevo attempts to load the Event after dropping that column’s data. However, if the Event size still exceeds 40 MB, then the Event is also dropped. As a result, you may see discrepancies between your Source and Destination data. To avoid such a scenario, ensure that each Event contains less than 40 MB of data.
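
The size rule above can be expressed as a minimal sketch. It is an illustration of the described behavior, not Hevo's actual code. The function name `columns_to_load`, the representation of an Event as a mapping of column names to sizes in bytes, and the use of 1 MB = 1024 × 1024 bytes are all assumptions.

```python
from typing import Optional

MB = 1024 * 1024             # assumption: binary megabytes
MAX_COLUMN_BYTES = 16 * MB   # per-column limit stated above
MAX_EVENT_BYTES = 40 * MB    # per-Event limit stated above


def columns_to_load(column_sizes: dict[str, int]) -> Optional[list[str]]:
    """Return the columns that would be loaded, or None if the Event is skipped."""
    # Drop the data of any column that exceeds the per-column limit.
    kept = {col: size for col, size in column_sizes.items()
            if size <= MAX_COLUMN_BYTES}
    # If the remaining data still exceeds the per-Event limit, skip the Event.
    if sum(kept.values()) > MAX_EVENT_BYTES:
        return None
    return list(kept)
```

For example, an Event with a 1 MB column and a 17 MB column would be loaded without the 17 MB column, while an Event with three 15 MB columns would be skipped because its total size exceeds 40 MB.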

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |

|---|---|---|

| Nov-07-2025 | NA | Updated the document as per the latest Hevo UI. |

| Sep-18-2025 | NA | Updated section, Configuring Amplitude Analytics as a Source as per the latest UI. |

| Sep-15-2025 | NA | Added section, Source Considerations. |

| Jul-07-2025 | NA | Updated the Limitations section to inform about the max record and column size in an Event. |

| Jan-07-2025 | NA | Updated the Limitations section to add information on Event size. |

| Nov-05-2024 | NA | Updated section, Retrieving the Amplitude API Key and Secret as per the latest Amplitude Analytics UI. |

| Mar-05-2024 | 2.21 | Updated the ingestion frequency table in the Data Replication section. |

| Apr-04-2023 | NA | Updated section, Configuring Amplitude Analytics as a Source to update the information about historical sync duration. |

| Jun-21-2022 | 1.91 | - Modified section, Configuring Amplitude Analytics as a Source to reflect the latest UI changes. - Updated the Pipeline frequency information in the Data Replication section. |

| Mar-07-2022 | 1.83 | Updated the introduction paragraph and the section,Data Replication, about automatic adjustment of ingestion duration. |

| Oct-25-2021 | NA | Added the Pipeline frequency information in the Data Replication section. |

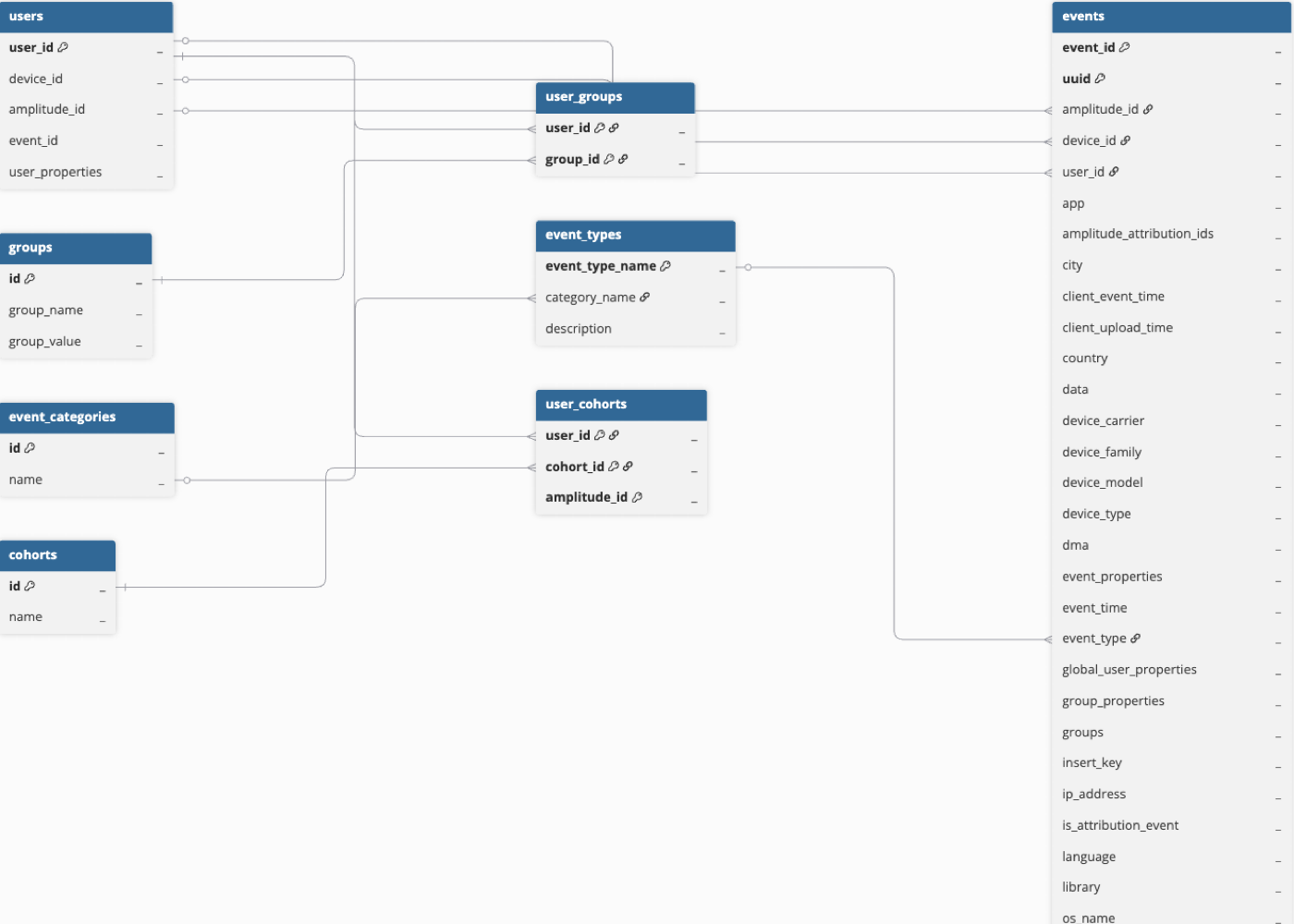

| Apr-06-2021 | 1.60 | - Added a note to the section Schema and Primary Keys - Updated the ERD. The User object now has three fields, user_id, amplitude_id and device_id as primary keys. The field uuid in the Event object is also a primary key now. |