Snowflake offers a cloud-based data storage and analytics service, commonly referred to as a data warehouse-as-a-service. Companies can use it to store and analyze data on cloud-based hardware and software.

Snowflake automatically provides you with one data warehouse when you create an account. Each data warehouse can contain one or more databases, although this is not mandatory.

The data from your Pipeline is staged in Hevo's S3 bucket before it is loaded into your Snowflake warehouse.

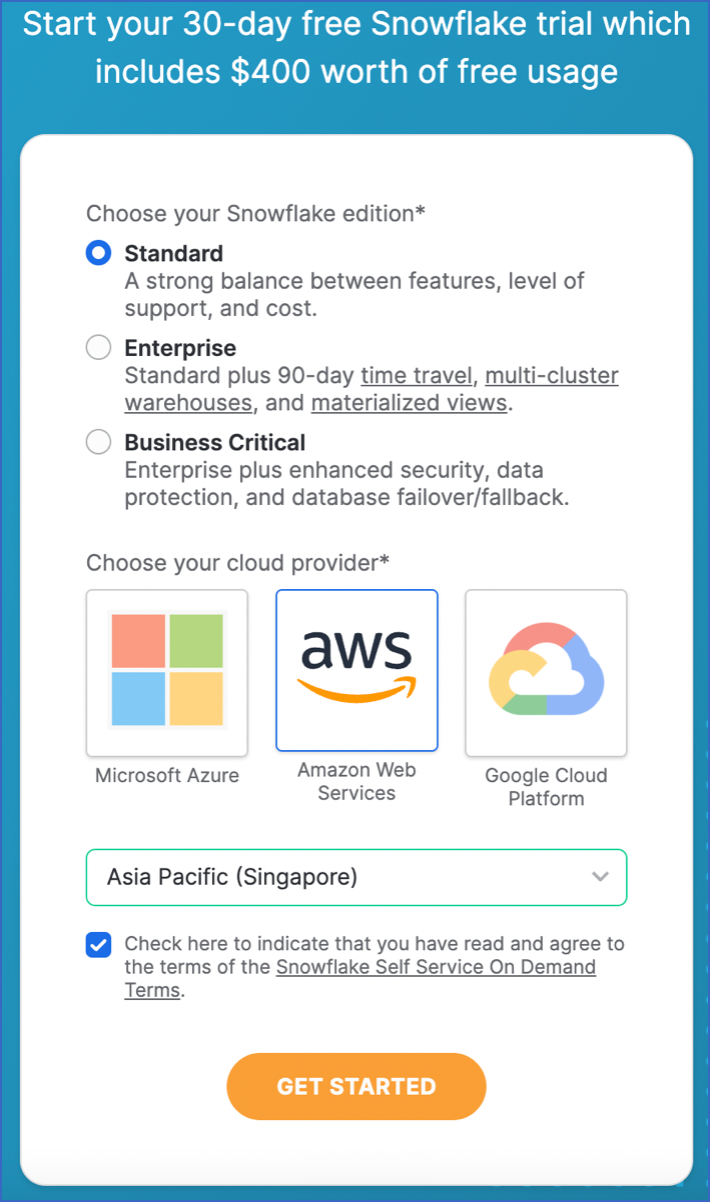

The Snowflake data warehouse may be hosted on any of the following cloud providers:

- Amazon Web Services (AWS)
- Google Cloud Platform (GCP)
- Microsoft Azure (Azure)

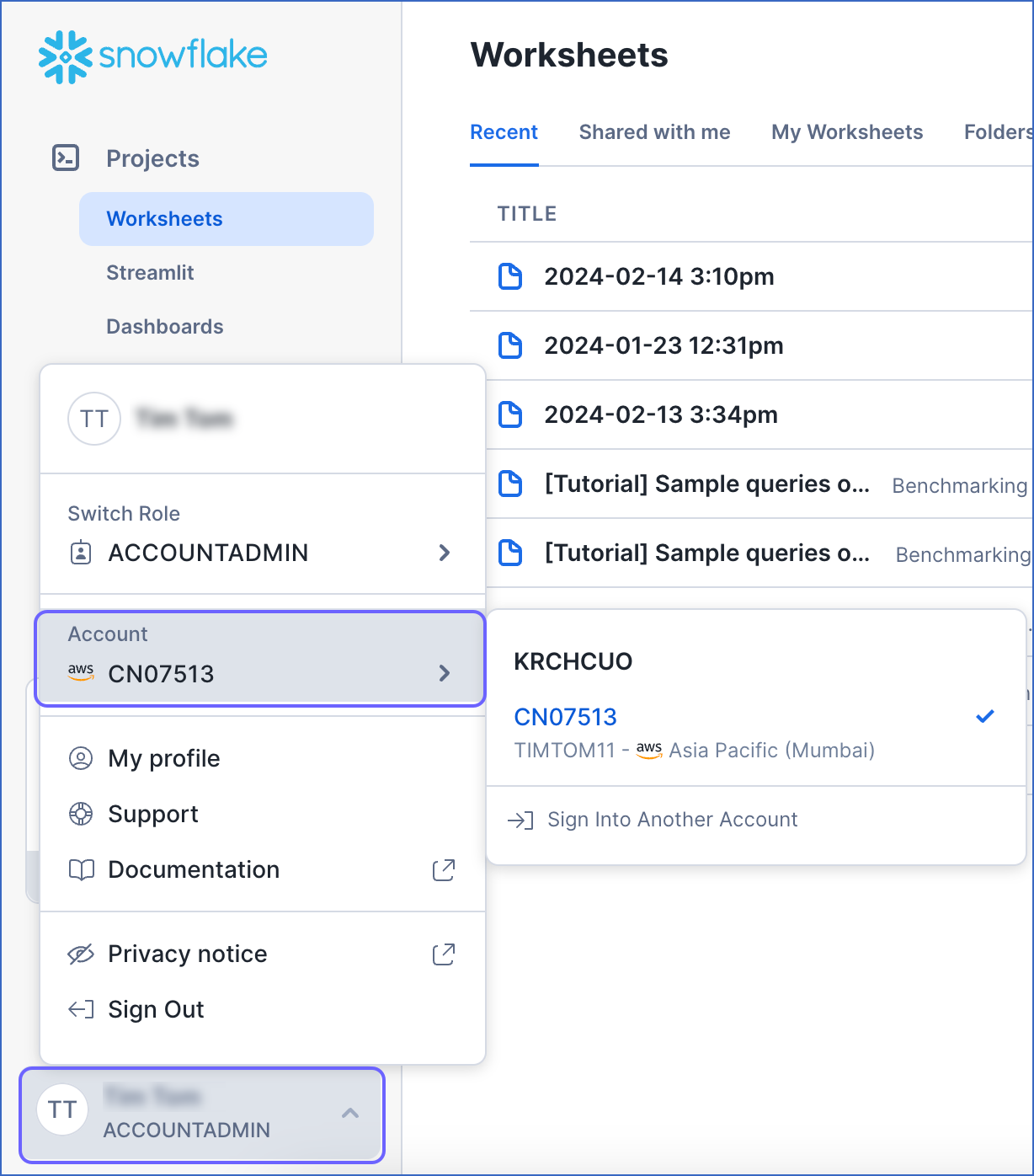

To connect your Snowflake instance to Hevo, you can either use a private link that directly connects to your cloud provider through a Virtual Private Cloud (VPC) or connect via a public network using the Snowflake account URL.

A private link enables communication and network traffic to remain exclusively within the cloud provider’s private network while maintaining direct and secure access across VPCs. It allows you to transfer data to Snowflake without going through the public internet or using proxies to connect Snowflake to your network. Note that even with a private link, the public endpoint is still accessible, and Hevo uses that to connect to your database cluster.

Please reach out to Hevo Support to retrieve the private link for your cloud provider.

Modifying Snowflake Destination Configuration in Edge

You can modify some settings of your Snowflake Edge Destination after its creation. However, any configuration changes will affect all the Pipelines using that Destination.

To modify the configuration of your Snowflake Destination in Edge:

-

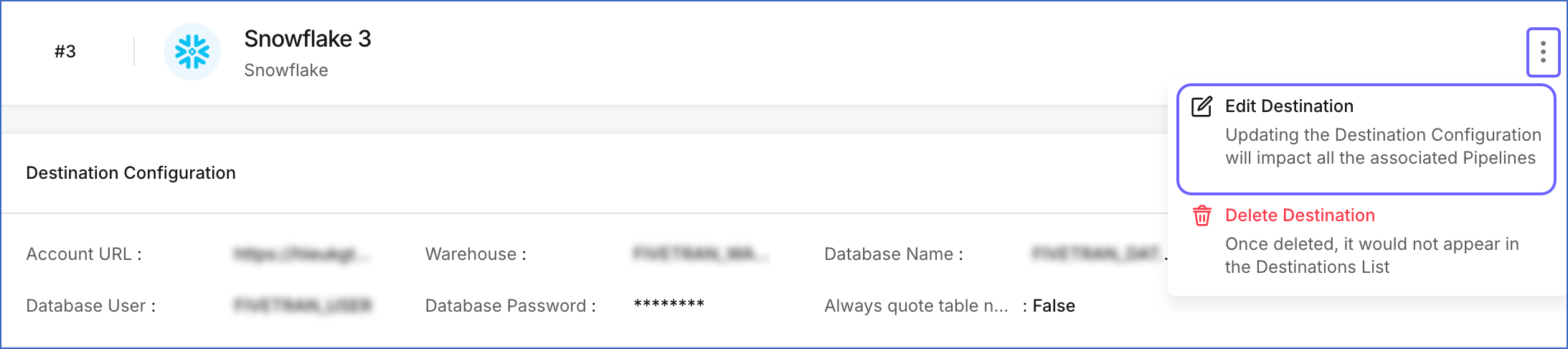

In the detailed view of your Destination, do one of the following:

-

Click the More (

) icon to access the Destination Actions menu, and then click Edit Destination.

) icon to access the Destination Actions menu, and then click Edit Destination.

-

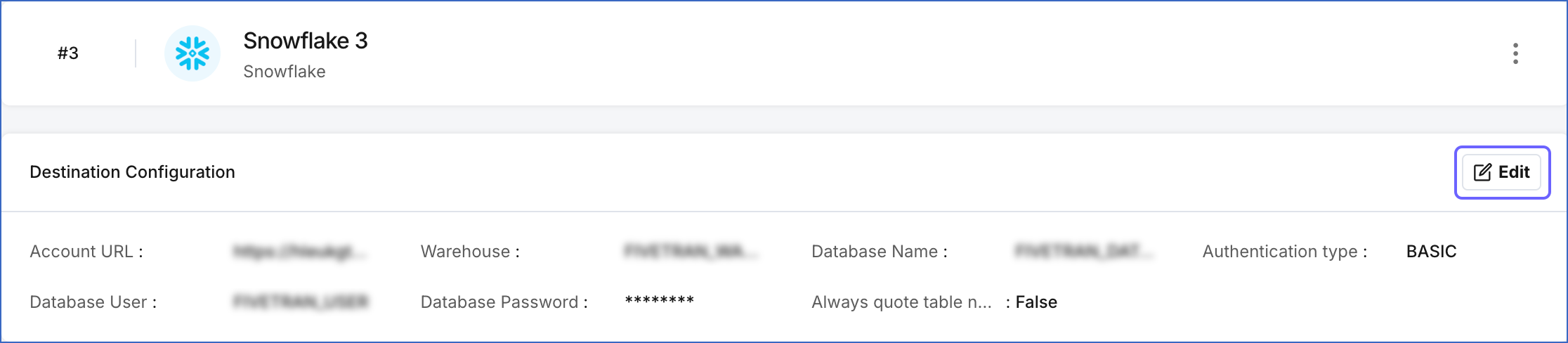

In the Destination Configuration section, click Edit.

-

-

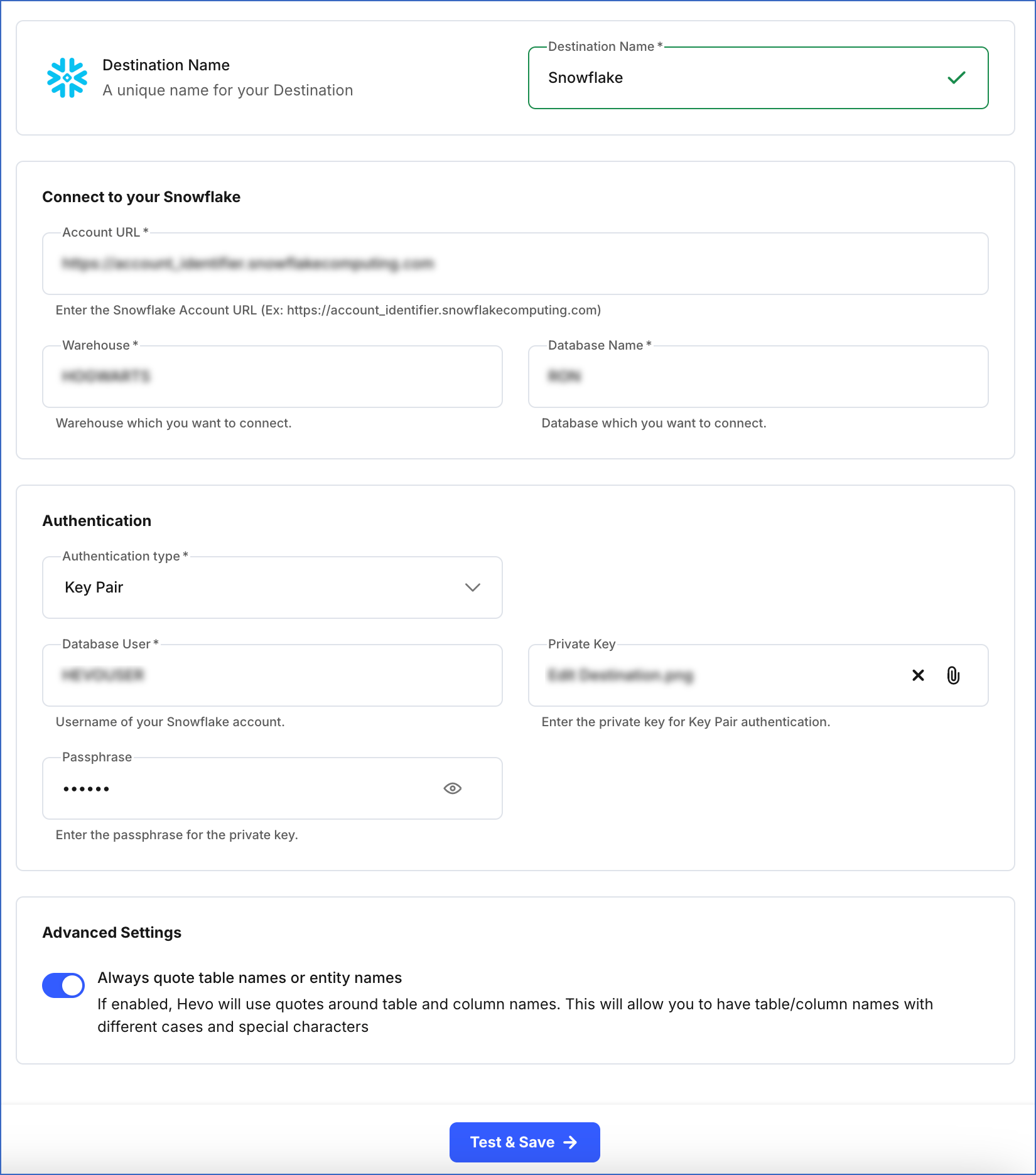

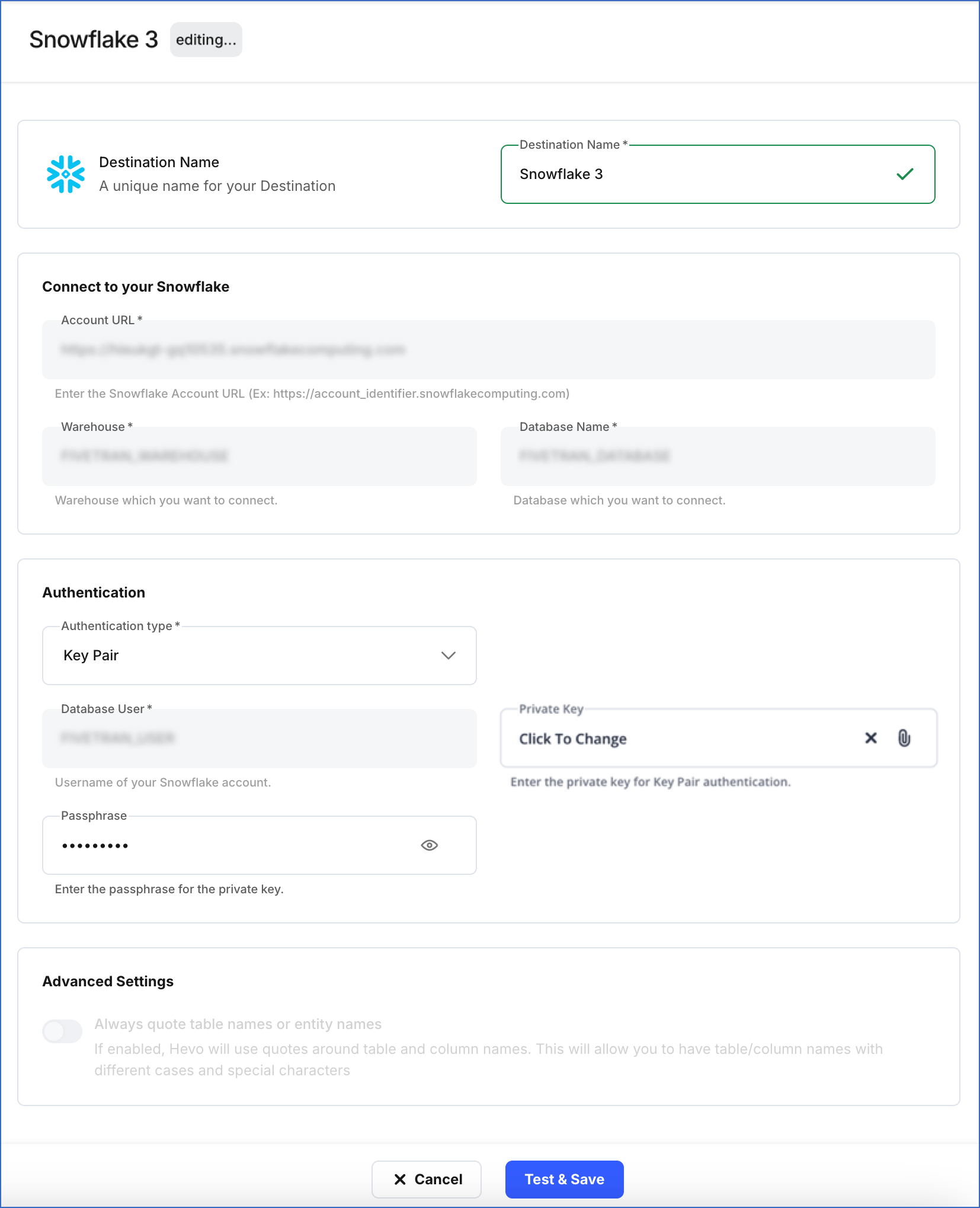

On the <Your Destination Name> editing page:

-

You can specify a new name for your Destination, not exceeding 255 characters.

-

In the Authentication section, you can modify your Authentication type and update the necessary fields based on the selected type:

-

Key Pair:

-

Private Key: Click the attach (

) icon to upload your encrypted or non-encrypted private key file. As the database user configured in your Destination cannot be changed, ensure that the public key corresponding to the uploaded private key is assigned to it.

) icon to upload your encrypted or non-encrypted private key file. As the database user configured in your Destination cannot be changed, ensure that the public key corresponding to the uploaded private key is assigned to it. -

Passphrase: Click Change to clear the field. If you uploaded an encrypted private key, provide the password used to generate it; otherwise, leave the field blank.

-

-

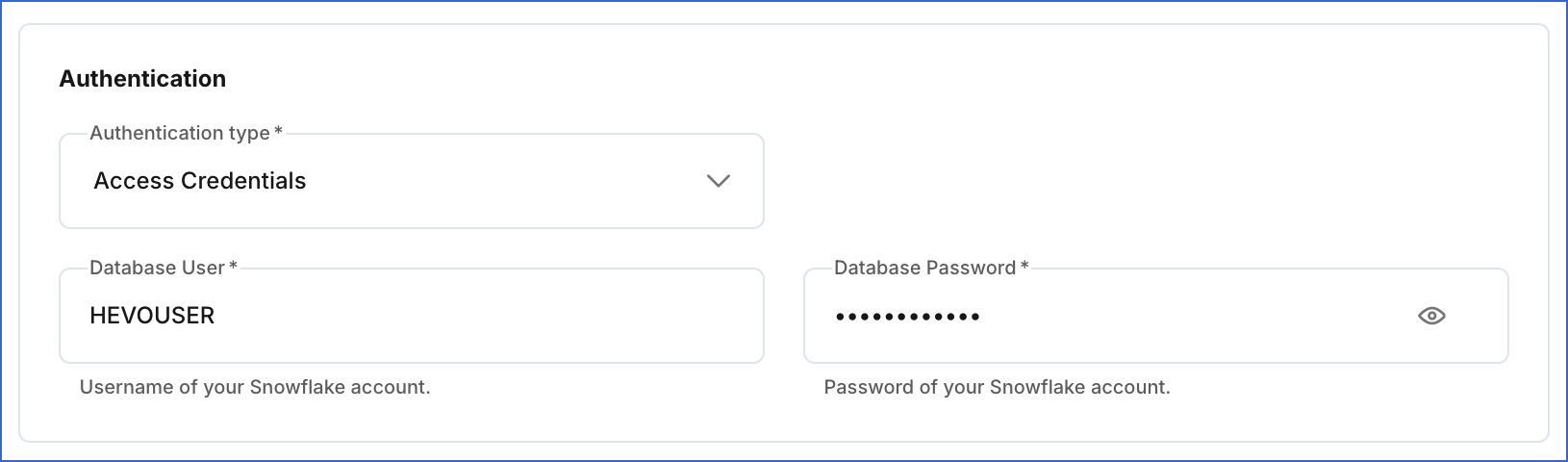

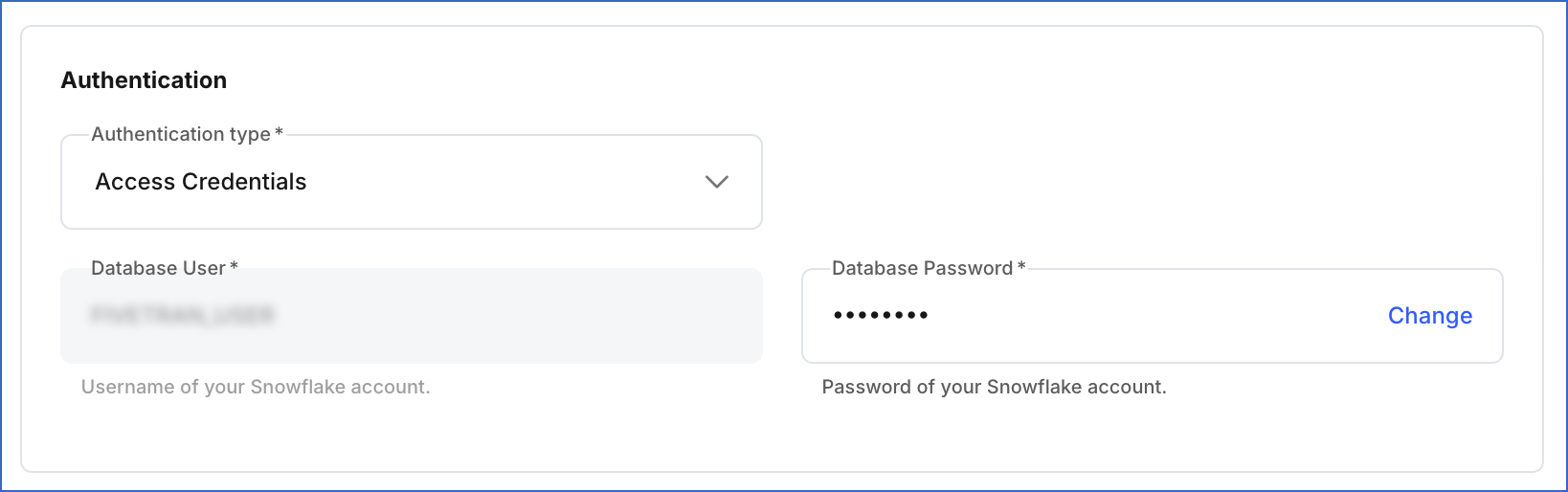

Access Credentials:

- Database Password: Click Change to update the password for the user configured in your Destination.

-

-

-

Click Test & Save to check the connection to your Snowflake Destination and then save the modified configuration.
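Before uploading a private key, you can check that it matches the public key assigned to the database user by comparing fingerprints. The following minimal sketch uses only the Python standard library and computes the SHA-256 fingerprint of a PEM-encoded public key. It assumes you have already derived the public key from your private key, for example with `openssl rsa -in rsa_key.p8 -pubout -out rsa_key.pub`. It also assumes you compare the result with the RSA_PUBLIC_KEY_FP value that Snowflake's `DESC USER` command reports for that user. The function name and file names are illustrative.

```python
import base64
import hashlib


def public_key_fingerprint(pem_text: str) -> str:
    """Return the SHA-256 fingerprint of a PEM-encoded public key.

    The PEM body is the base64-encoded DER (SubjectPublicKeyInfo) form of
    the key, so hashing the decoded body is equivalent to hashing the DER
    output of `openssl rsa -pubin -in rsa_key.pub -outform DER`.
    """
    # Drop the BEGIN/END marker lines and join the base64 body.
    body = "".join(
        line.strip()
        for line in pem_text.splitlines()
        if line.strip() and not line.strip().startswith("-----")
    )
    der = base64.b64decode(body)
    digest = hashlib.sha256(der).digest()
    # Same "SHA256:<base64>" form that Snowflake shows for RSA_PUBLIC_KEY_FP.
    return "SHA256:" + base64.b64encode(digest).decode("ascii")
```

For example, `public_key_fingerprint(open("rsa_key.pub").read())` should return the same string as the user's RSA_PUBLIC_KEY_FP if the two keys belong to the same key pair.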

Data Type Evolution in Snowflake Destinations

Hevo has a standardized data system that defines unified internal data types, referred to as Hevo data types. During the data ingestion phase, the Source data types are mapped to the Hevo data types, which are then transformed into the Destination-specific data types during the data loading phase. A mapping is then generated to evolve the schema of the Destination tables.

The following image illustrates the data type hierarchy applied to Snowflake Destination tables:

Data Type Mapping

The following table shows the mapping between Hevo data types and Snowflake data types:

| Hevo Data Type | Snowflake Data Type |
|---|---|
| BOOLEAN | BOOLEAN |
| BYTEARRAY | BINARY |
| BYTE, SHORT, INTEGER, LONG | NUMBER(38,0) |
| DATE | DATE |
| DATETIME | TIMESTAMP_NTZ |
| DATETIME_TZ | TIMESTAMP_TZ |
| DECIMAL | NUMBER or VARCHAR |
| FLOAT, DOUBLE | FLOAT |
| VARCHAR | VARCHAR |
| JSON, XML | VARIANT |
| TIME | TIME |
| TIMETZ | VARCHAR |
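The table above works as a simple lookup. The following Python sketch transcribes it into a dictionary. `HEVO_TO_SNOWFLAKE` and `snowflake_type` are hypothetical names used for illustration and are not part of any Hevo API. DECIMAL is left out on purpose because its target type depends on precision and scale.

```python
# Transcribed from the mapping table above (illustrative, not a Hevo API).
# DECIMAL is omitted: its target type depends on precision and scale.
HEVO_TO_SNOWFLAKE = {
    "BOOLEAN": "BOOLEAN",
    "BYTEARRAY": "BINARY",
    "BYTE": "NUMBER(38,0)",
    "SHORT": "NUMBER(38,0)",
    "INTEGER": "NUMBER(38,0)",
    "LONG": "NUMBER(38,0)",
    "DATE": "DATE",
    "DATETIME": "TIMESTAMP_NTZ",
    "DATETIME_TZ": "TIMESTAMP_TZ",
    "FLOAT": "FLOAT",
    "DOUBLE": "FLOAT",
    "VARCHAR": "VARCHAR",
    "JSON": "VARIANT",
    "XML": "VARIANT",
    "TIME": "TIME",
    "TIMETZ": "VARCHAR",
}


def snowflake_type(hevo_type: str) -> str:
    """Look up the Snowflake column type for a non-DECIMAL Hevo data type."""
    try:
        return HEVO_TO_SNOWFLAKE[hevo_type.upper()]
    except KeyError:
        raise ValueError(f"no direct mapping listed for {hevo_type!r}") from None
```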

Handling the Decimal data type

For Snowflake Destinations, Hevo maps DECIMAL data values with a fixed precision (P) and scale (S) to either the NUMBER (NUMERIC) or the VARCHAR data type. The choice depends on the precision, which is the number of significant digits in the value, and the scale, which is the number of digits to the right of the decimal point. The following table shows the mapping:

| Precision and Scale of the Decimal Data Value | Snowflake Data Type |
|---|---|
| Precision: > 0 and <= 38; Scale: > 0 and <= 37 | NUMBER |

For precision and scale values other than those mentioned in the table above, Hevo maps the DECIMAL data type to a VARCHAR data type.
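The DECIMAL rule reduces to a single range check. This minimal sketch follows the table literally, including the requirement that the scale be greater than 0. `decimal_target_type` is a hypothetical helper, not Hevo's implementation.

```python
def decimal_target_type(precision: int, scale: int) -> str:
    """Apply the DECIMAL mapping rule as stated in the table above.

    Values with 0 < precision <= 38 and 0 < scale <= 37 map to NUMBER;
    every other combination falls back to VARCHAR.
    """
    if 0 < precision <= 38 and 0 < scale <= 37:
        return "NUMBER"
    return "VARCHAR"
```

For example, a DECIMAL(10,2) value maps to NUMBER, while a DECIMAL(39,2) value exceeds the precision limit and maps to VARCHAR.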

Read Numeric Types to learn more about these data types, their ranges, and the literals Snowflake uses to represent numeric values.

Handling Time and Timestamp data types

Snowflake supports fractional seconds up to 9 digits of precision (nanoseconds) for both TIME and TIMESTAMP data types. Hevo truncates any digits beyond this limit. For example, a Source value of 12:00:00.1234567890 is stored as 12:00:00.123456789.
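A short string-based sketch shows the truncation behavior. It assumes the value is a text literal in which the first period followed by digits introduces the fractional seconds, as in the example above. `truncate_fractional_seconds` is a hypothetical helper. Extra digits are cut off rather than rounded, which matches the description.

```python
import re

# First "." followed by digits: the fractional-seconds part (assumption).
_FRACTION = re.compile(r"\.(\d+)")


def truncate_fractional_seconds(value: str, max_digits: int = 9) -> str:
    """Keep at most max_digits fractional-second digits, without rounding."""
    return _FRACTION.sub(lambda m: "." + m.group(1)[:max_digits], value, count=1)
```

Any suffix after the digits, such as a time zone offset, is left untouched. For example, `2024-01-01 12:00:00.1234567890+05:30` becomes `2024-01-01 12:00:00.123456789+05:30`.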

Handling of Unsupported Data Types

Hevo does not allow the direct mapping of a Source data type to any of the following Snowflake data types:

- ARRAY
- STRUCT
- Any other data type not listed in the table above.

Consequently, if a Source object is mapped to an existing Snowflake table that contains columns of these unsupported data types, the table may become inconsistent. To prevent such inconsistencies during schema evolution, Hevo maps all unsupported data types to the VARCHAR data type in Snowflake.
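Here is a minimal sketch of the VARCHAR fallback. It assumes that the supported Snowflake types are exactly those listed in the mapping table. `SUPPORTED_SNOWFLAKE_TYPES` and `resolve_column_type` are hypothetical names. Stripping type parameters such as `(38,0)` before the check is an illustrative choice.

```python
# Snowflake types that appear in the mapping table (assumption: this set is
# exhaustive). Anything else, for example ARRAY or STRUCT, is unsupported.
SUPPORTED_SNOWFLAKE_TYPES = {
    "BOOLEAN", "BINARY", "NUMBER", "DATE", "TIMESTAMP_NTZ",
    "TIMESTAMP_TZ", "FLOAT", "VARCHAR", "VARIANT", "TIME",
}


def resolve_column_type(requested_type: str) -> str:
    """Return the requested type if supported, otherwise fall back to VARCHAR."""
    # Compare only the base name, so NUMBER(38,0) is checked as NUMBER.
    base = requested_type.upper().split("(", 1)[0].strip()
    return requested_type if base in SUPPORTED_SNOWFLAKE_TYPES else "VARCHAR"
```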

Destination Considerations

- Snowflake converts any unquoted Source table and column names to uppercase while mapping them to the Destination table. For example, the Source table Table namE 05 is converted to TABLE_NAME_05. The same conventions apply to column names.

  However, if you have enabled the Always quote table names or entity names option while configuring your Snowflake Destination, your Source table and column names are preserved as-is. For example, the Source table ‘Table namE 05’ is created as “Table namE 05” in Snowflake.
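The naming behavior can be approximated as follows. This sketch is illustrative only. Replacing characters other than letters, digits, and underscores with underscores is an assumption inferred from the TABLE_NAME_05 example, not a documented Hevo rule. Two steps do follow Snowflake's identifier rules: uppercasing unquoted names and doubling embedded double quotes in quoted names. `destination_table_name` is a hypothetical helper.

```python
import re


def destination_table_name(source_name: str, always_quote: bool = False) -> str:
    """Approximate how a Source name appears in Snowflake (illustrative)."""
    if always_quote:
        # Quoted identifiers keep their case; an embedded double quote is
        # escaped by doubling it.
        return '"' + source_name.replace('"', '""') + '"'
    # Assumption inferred from the example: non-identifier characters such as
    # spaces become underscores, then the unquoted name is uppercased.
    return re.sub(r"[^A-Za-z0-9_]", "_", source_name).upper()
```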

Limitations

- Hevo replicates a maximum of 4096 columns to each Snowflake table, of which six are Hevo-reserved metadata columns used during data replication. Therefore, your Pipeline can replicate up to 4090 (4096 - 6) columns for each table.

- If a Source object has a column value exceeding 16 MB, Hevo marks the Events as failed during ingestion, as Snowflake allows a maximum column value size of 16 MB.
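You can check both limits before a load with a short pre-flight sketch. It assumes that 16 MB means 16,777,216 bytes, which is Snowflake's documented maximum size for a VARCHAR value. It also assumes that each row is represented as a dictionary mapping column names to values. `check_limits` and the constant names are hypothetical.

```python
MAX_COLUMNS_PER_TABLE = 4096
HEVO_RESERVED_COLUMNS = 6
MAX_SOURCE_COLUMNS = MAX_COLUMNS_PER_TABLE - HEVO_RESERVED_COLUMNS  # 4090

# Assumption: "16 MB" is read as 16 * 1024 * 1024 = 16,777,216 bytes.
MAX_VALUE_BYTES = 16 * 1024 * 1024


def check_limits(row: dict) -> list:
    """Return a message for each documented limit the row would exceed."""
    problems = []
    if len(row) > MAX_SOURCE_COLUMNS:
        problems.append(f"{len(row)} columns exceeds the {MAX_SOURCE_COLUMNS}-column limit")
    for name, value in row.items():
        # Measure bytes as-is, and everything else as its UTF-8 text form.
        data = value if isinstance(value, bytes) else str(value).encode("utf-8")
        if len(data) > MAX_VALUE_BYTES:
            problems.append(f"column {name!r} is {len(data)} bytes")
    return problems
```

An empty list means the row fits within both limits. Each message corresponds to a condition under which the Events would fail.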