Snowflake offers a cloud-based data storage and analytics service, generally termed as data warehouse-as-a-service. Companies can use it to store and analyze data using cloud-based hardware and software.

Snowflake provides you one data warehouse automatically when you create an account. Each data warehouse can further have one or more databases, although this is not mandatory.

The data from your Pipeline is staged in Hevo’s S3 bucket before being finally loaded to your Snowflake warehouse.

The Snowflake data warehouse may be hosted on any of the following cloud providers:

To connect your Snowflake instance to Hevo, you can either use a private link which directly connects to your cloud provider through Virtual Private Cloud (VPC) or connect via a public network using your Snowflake account URL.

A private link enables communication and network traffic to remain exclusively within the cloud provider’s private network while maintaining direct and secure access across VPCs. It allows you to transfer data to Snowflake without going through the public internet or using proxies to connect Snowflake to your network. Note that even with a private link, the public endpoint is still accessible and Hevo uses that to connect to your database cluster.

Please reach out to Hevo Support to retrieve the private link for your cloud provider.

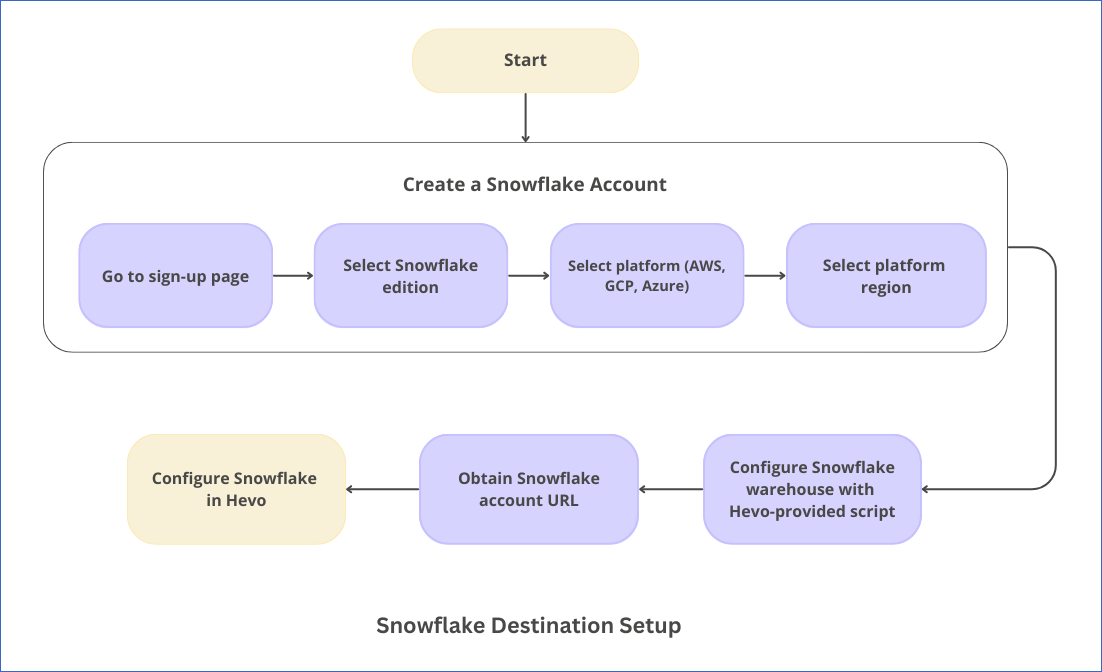

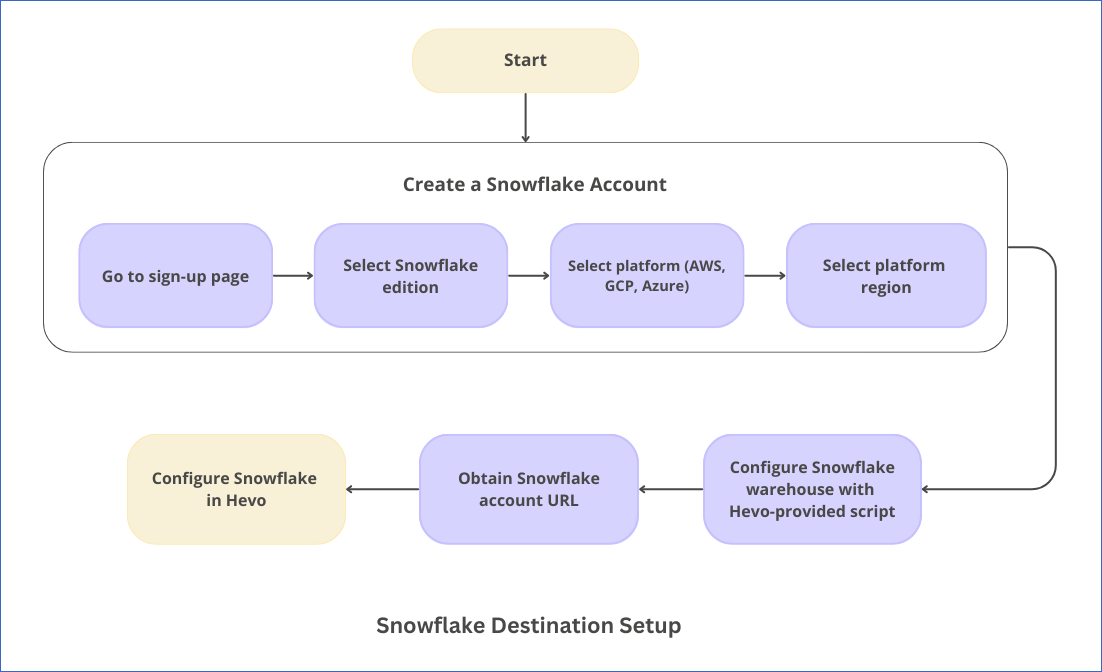

The following image illustrates the key steps that you need to complete to configure Snowflake as a Destination in Hevo:

Prerequisites

Refer to section, Create and Configure your Snowflake Warehouse to create a Snowflake warehouse with adequate permissions for Hevo to access your data.

Perform the following steps to configure your Snowflake Destination:

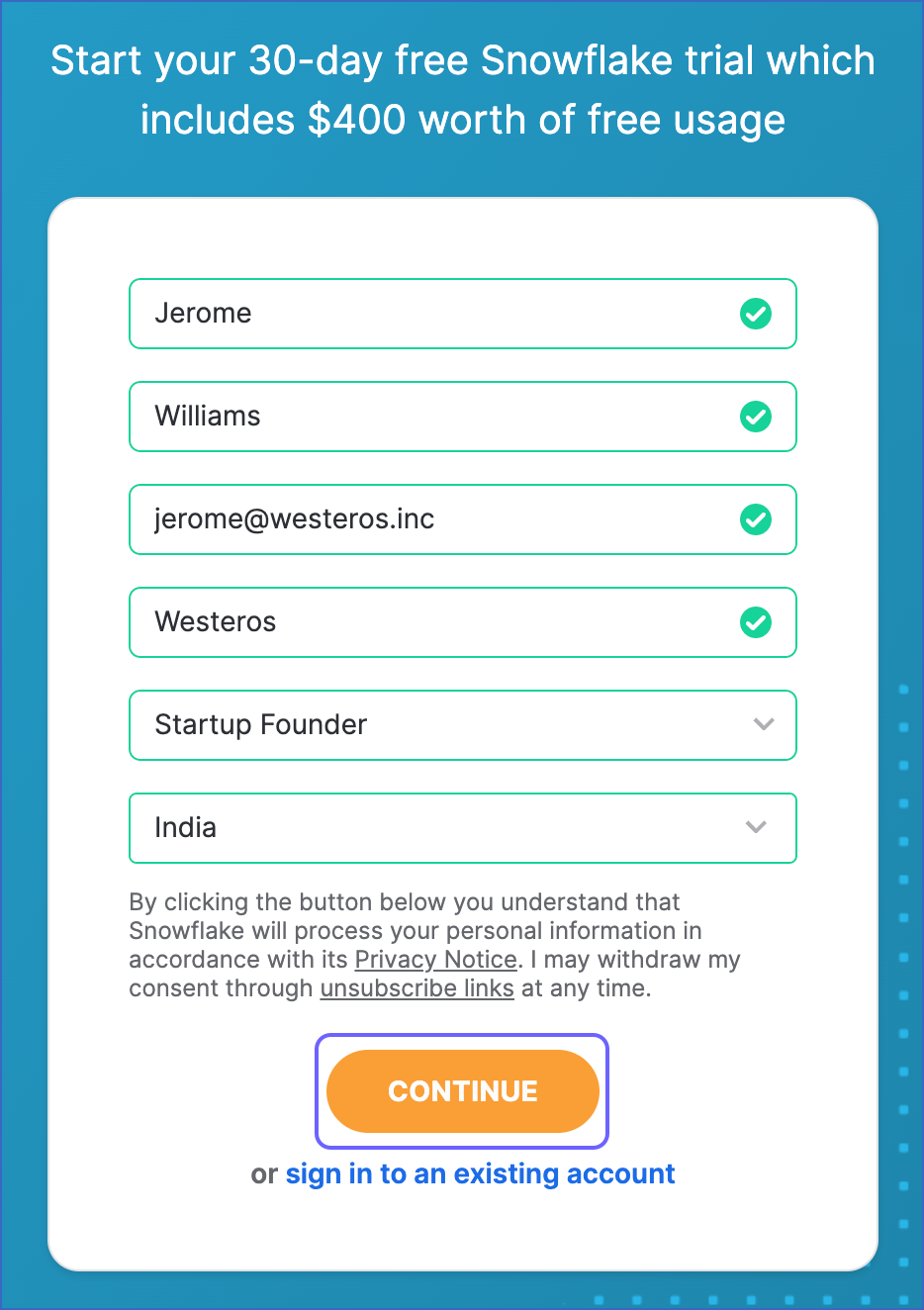

(Optional) Create a Snowflake Account

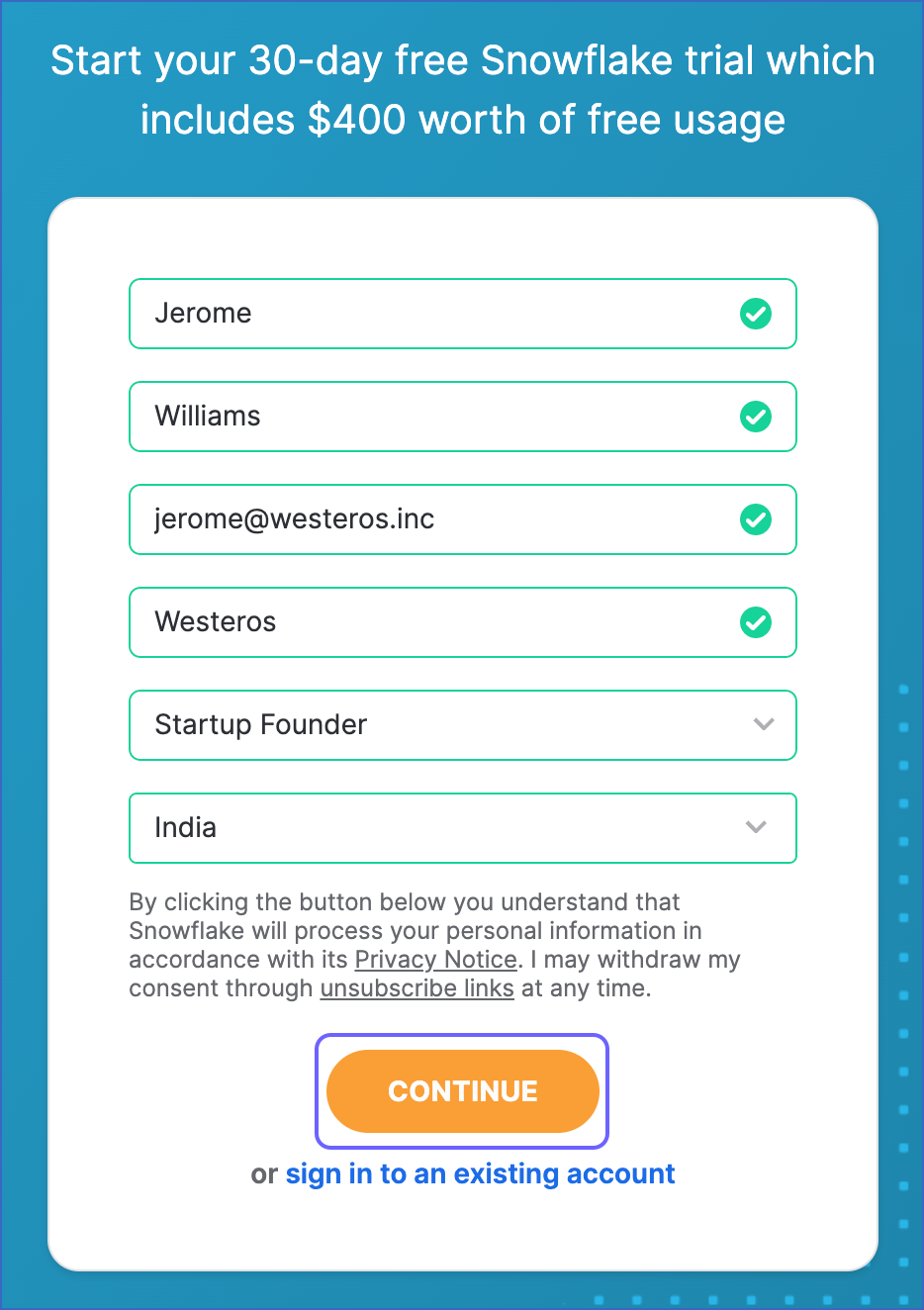

When you sign up for a Snowflake account, you get 30 days of free access with $400 credits. Beyond this limit, usage of the account is chargeable. The free trial starts from the day you activate the account. If you consume the credits before 30 days, the free trial ends and subsequent usage becomes chargeable. You can still log in to your account, however, you cannot use any features, such as running a virtual warehouse, loading data, or performing queries, until you upgrade your account or add more credits.

Perform the following steps to create a Snowflake account:

-

Go to https://signup.snowflake.com/.

-

On the Sign up page, specify the following and click CONTINUE:

-

First Name and Last Name: The first and last name of the account user.

-

Company Email: A valid email address that can be used to manage the Snowflake account.

-

Company Name: The name of your organization.

-

Role: The account user’s role in the organization.

-

Country: Your organization’s country or region.

-

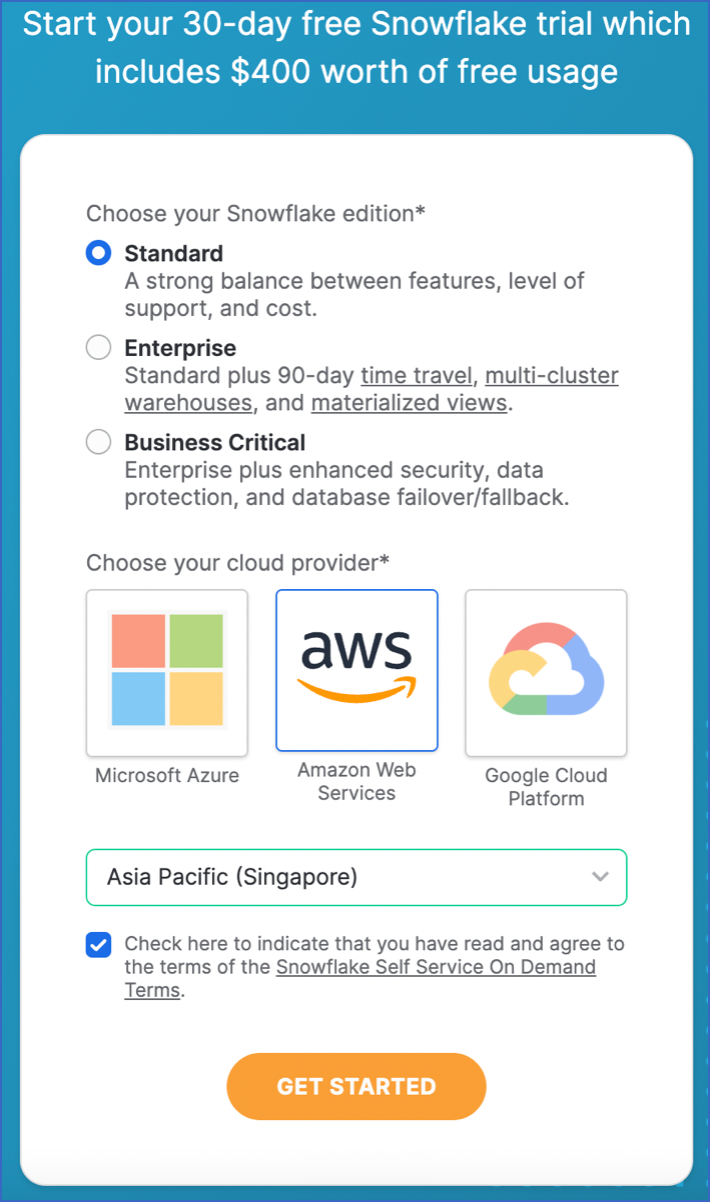

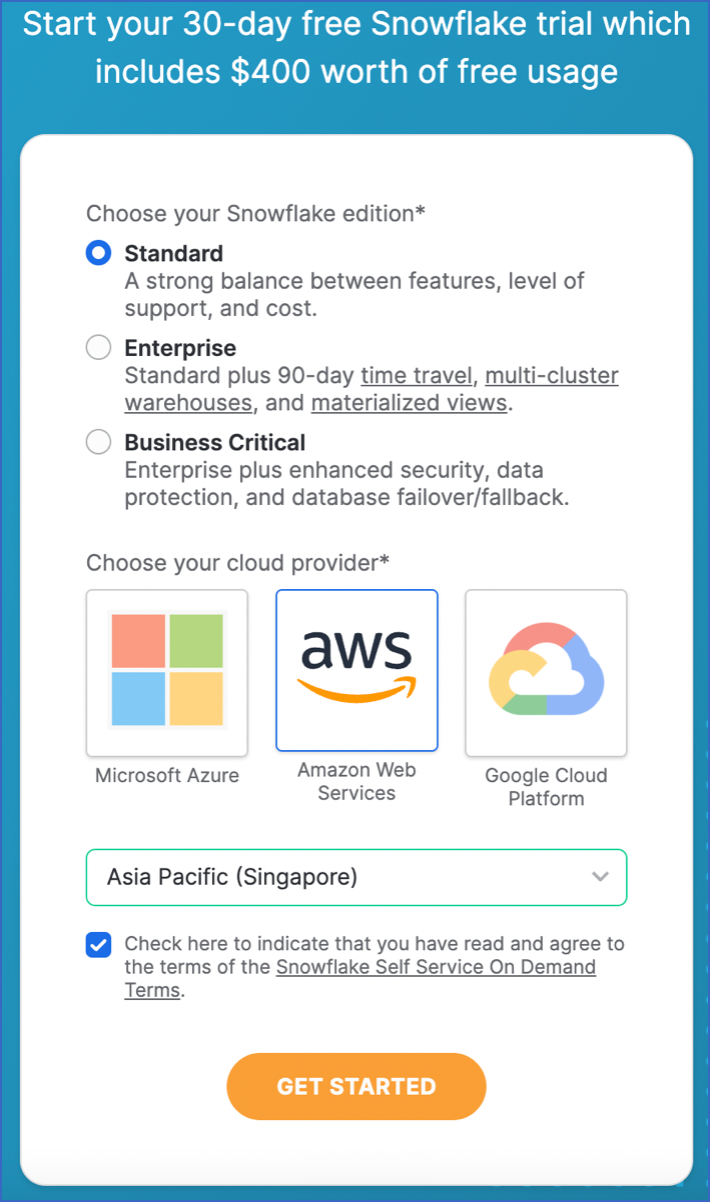

Select the Snowflake edition you want to use.

Note: You can choose the edition that meets your organization’s needs. Read Snowflake Editions to know more about the different editions available.

-

Select one of the following cloud platforms to host your Snowflake account:

- Amazon Web Services (AWS)

- Google Cloud Platform (GCP)

- Microsoft Azure (Azure)

Read Supported Cloud Platforms to know more about the details and pricing of each cloud platform.

-

Select the region for your cloud platform. In each platform, Snowflake provides one or more regions where the account can be provisioned.

-

Click GET STARTED.

An email to activate your account is sent to your registered email address. Click the link in the email to activate and sign in to your Snowflake account.

Hevo provides you with a ready-to-use script to configure the Snowflake warehouse you intend to use as a Destination.

Follow these steps to run the script:

-

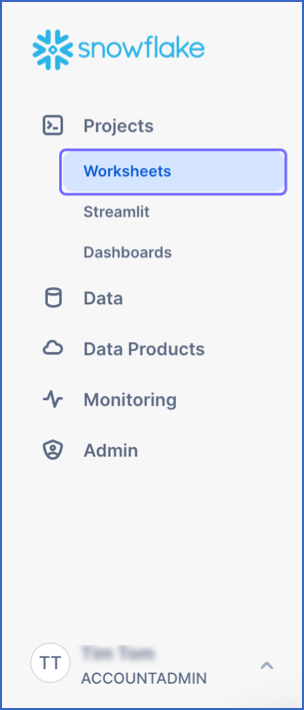

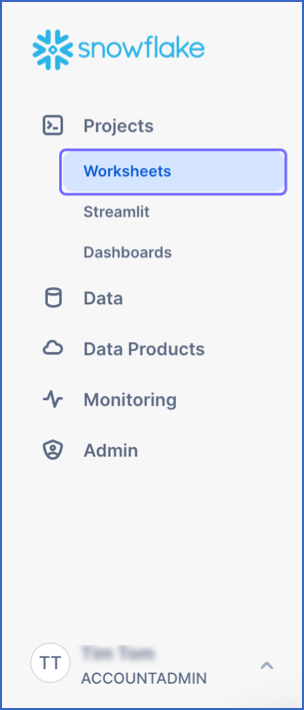

Log in to your Snowflake account.

-

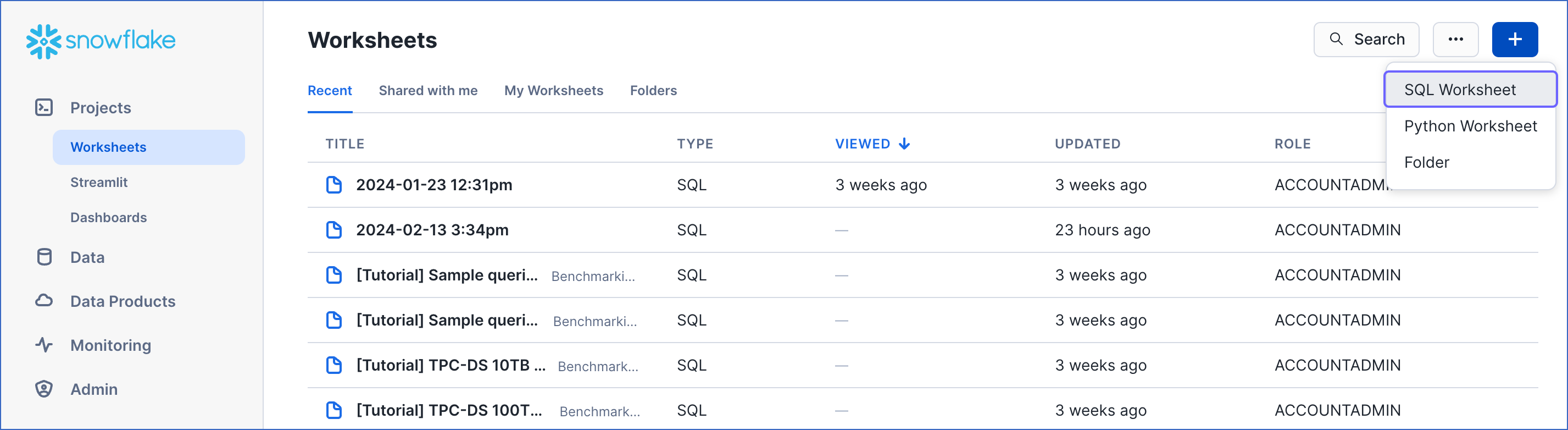

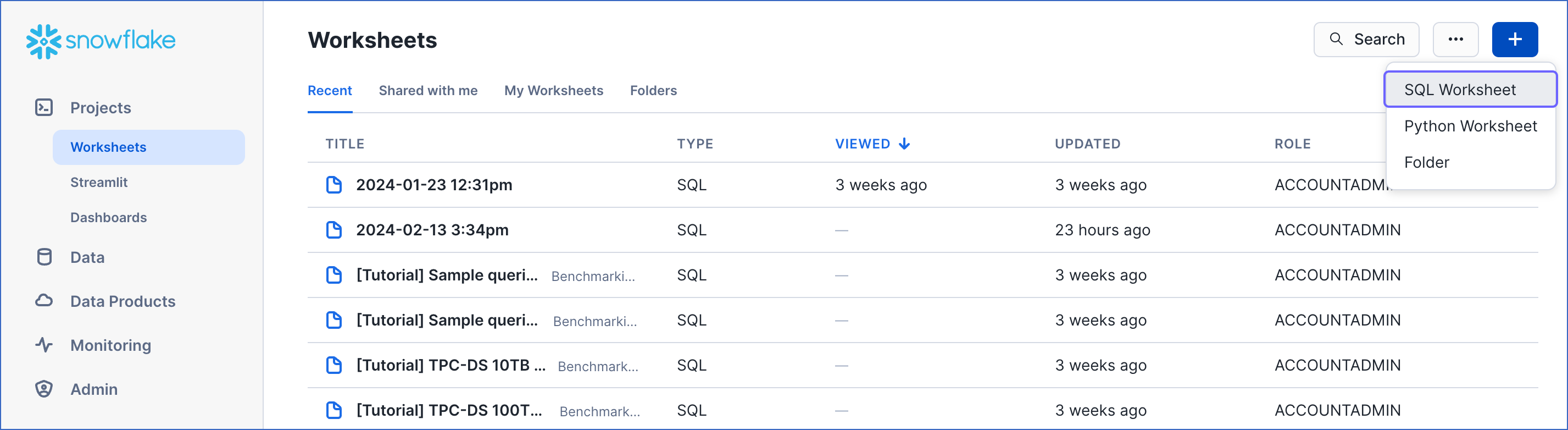

In the left navigation pane, under Projects, click Worksheets.

-

On the top right corner of the Worksheets tab, click the + icon and select SQL Worksheet from the drop-down to create a new worksheet.

-

Paste the following script in the worksheet. The script creates a new role for Hevo in your Snowflake Destination. Keeping your privacy in mind, the script grants only the essential permissions required by Hevo to load the data in your Destination.

-- create variables for user / password / role / warehouse / database (needs to be uppercase for objects)

set role_name = 'HEVO'; -- Replace "HEVO" with your role name

set user_name = 'HARRY_POTTER'; -- Replace "HARRY_POTTER" with your username

set user_password = 'Gryffindor'; -- Replace "Gryffindor" with the user password

set warehouse_name = 'HOGWARTS'; -- Replace "HOGWARTS" with the name of your warehouse

set database_name = 'RON'; -- Replace "RON" with the name of your database

set schemaName = 'DARK_ARTS'; -- Replace "DARK_ARTS" with the database schema name

set db_schema = concat($database_name, '.', $schemaName);

begin;

-- change role to securityadmin for user / role steps

use role securityadmin;

-- create role for HEVO

create

role if not exists identifier($role_name);

grant role identifier($role_name) to role SYSADMIN;

-- create a user for HEVO

create user if not exists identifier($user_name)

password = $user_password

default_role = $role_name

default_warehouse = $warehouse_name;

-- Grant access to the user

grant role identifier($role_name) to user identifier($user_name);

-- change role to sysadmin for warehouse / database steps

use role sysadmin;

-- create a warehouse for HEVO, if it does not exist

create

warehouse if not exists identifier($warehouse_name)

warehouse_size = xsmall

warehouse_type = standard

auto_suspend = 60

auto_resume = true

initially_suspended = true;

-- create database for HEVO

create database if not exists identifier($database_name);

-- grant HEVO role access to warehouse

grant USAGE

on warehouse identifier($warehouse_name)

to role identifier($role_name);

-- grant HEVO access to current schemas

use role accountadmin;

grant CREATE SCHEMA, MONITOR, USAGE, MODIFY

on database identifier($database_name)

to role identifier($role_name);

-- grant Hevo access to future schemas

use role accountadmin;

grant SELECT on future tables in database identifier($database_name) to role identifier($role_name);

grant MONITOR, USAGE, MODIFY on future schemas in database identifier($database_name) to role identifier($role_name);

use role accountadmin;

CREATE SCHEMA IF not exists identifier($db_schema);

GRANT USAGE, MONITOR, CREATE TABLE, CREATE EXTERNAL TABLE, MODIFY ON SCHEMA identifier($db_schema) TO ROLE identifier($role_name);

commit;

-

Replace the sample values provided in lines 2-7 of the script with your own. You can specify a new warehouse, role, and database name to create these now, or use pre-existing ones to load data into. These are the credentials that you will be using to connect your warehouse to Hevo.

Note: The values for role_name, user_name, warehouse_name, database_name and schemaName must be in upper case.

-

Press CMD + A (Mac) or CTRL + A (Windows) inside the worksheet area to select the script.

-

Press CMD+return (Mac) or CTRL + Enter (Windows) to run the script.

-

Once the script runs successfully, you can use the credentials from lines 2-7 of the script to connect your Snowflake warehouse to Hevo.

Obtain your Snowflake Account URL

The organization name and account name are visible in your Snowflake web interface URL.

For most accounts, the URL looks like https://<orgname>-<account_name>.snowflakecomputing.com.

For example, https://hevo-westeros.snowflakecomputing.com. Here, hevo is the organization name and westeros is your account name.

Perform the following steps to obtain your Snowflake account URL:

-

Log in to your Snowflake instance.

-

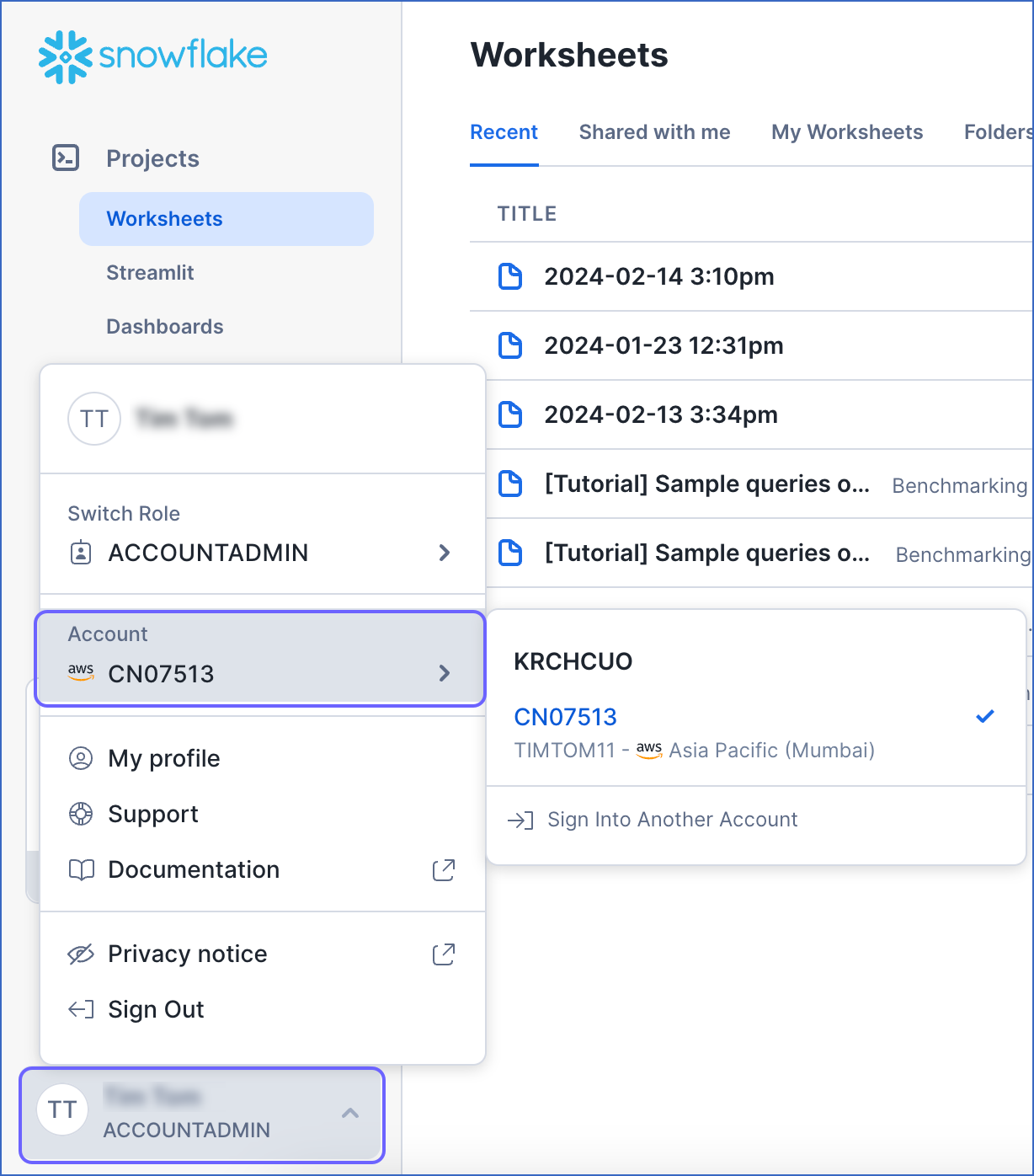

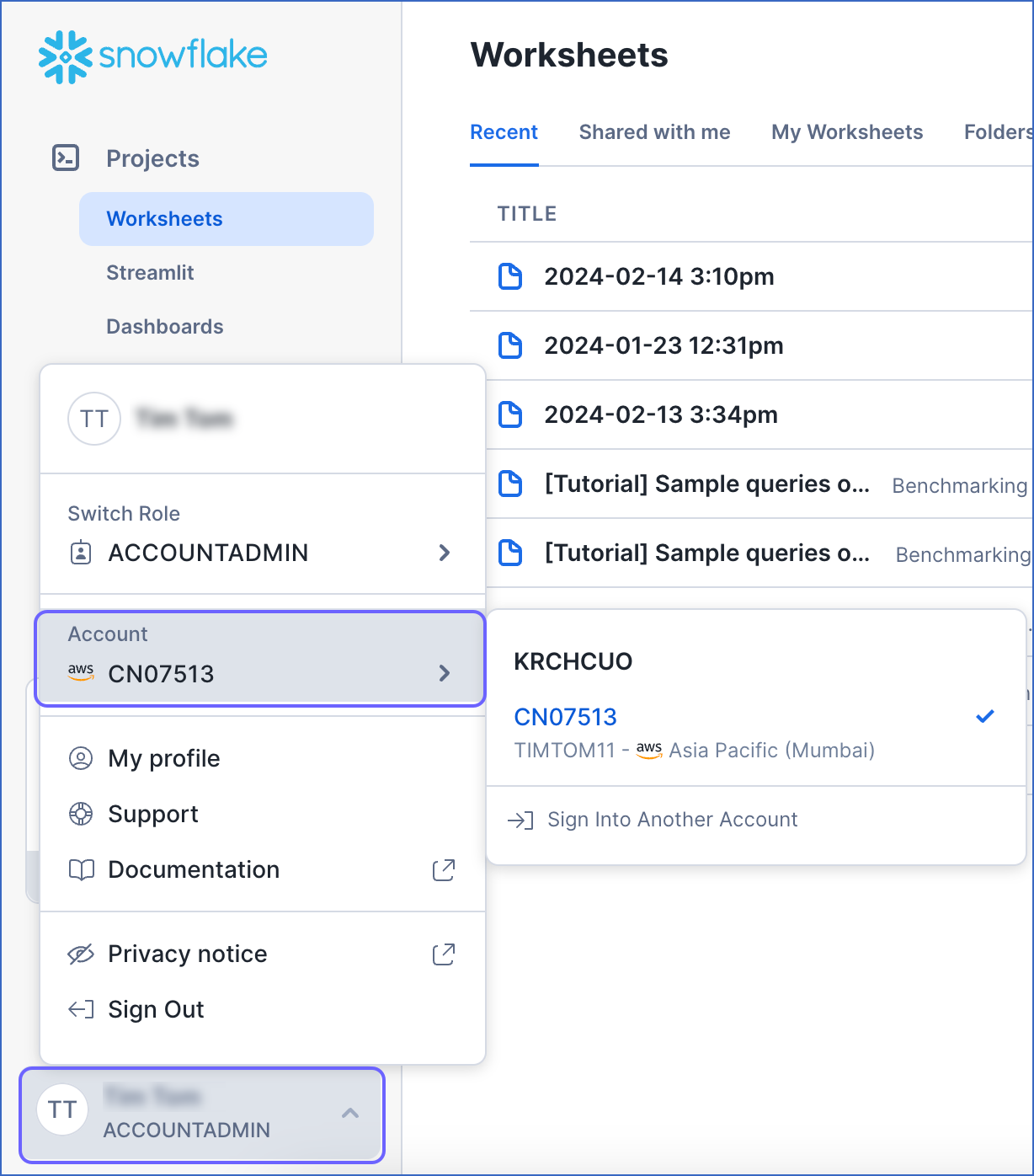

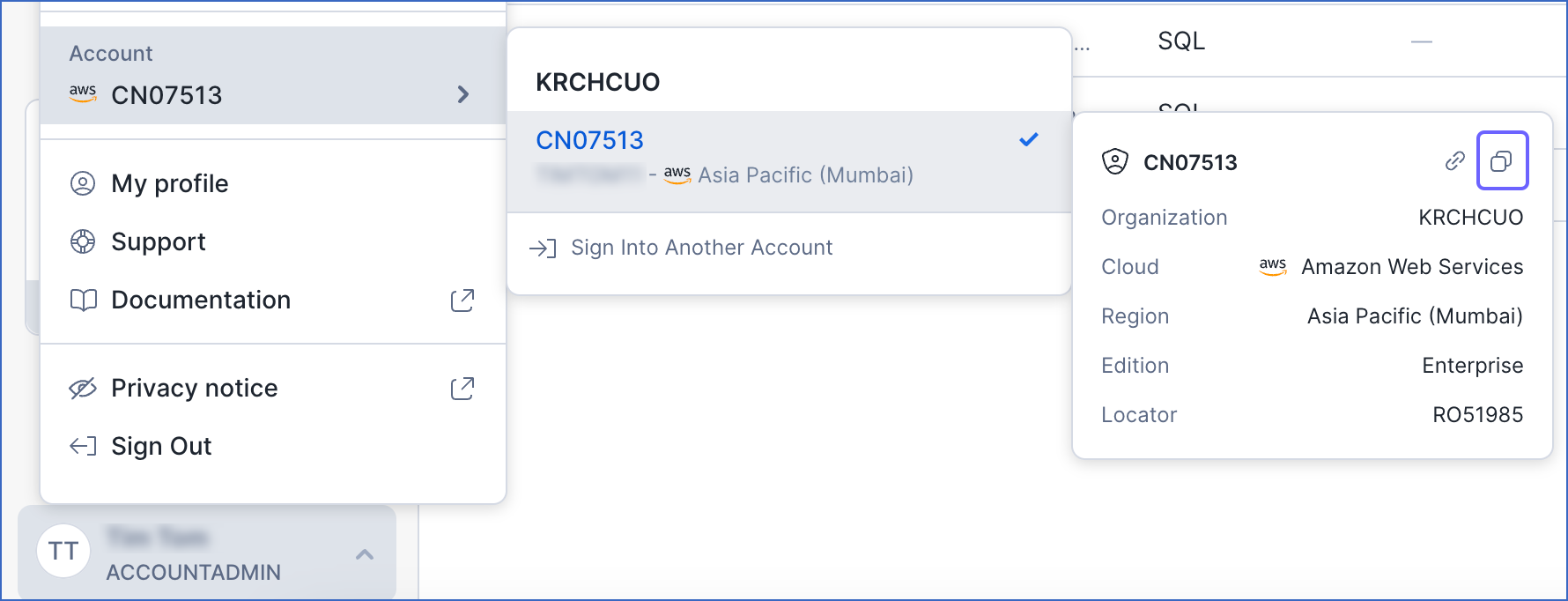

Navigate to the bottom of the left navigation pane, click the account selector, and then hover over the Account section.

-

In the pop-up, locate the account whose URL you want to obtain and hover over it.

-

In the account details pop-up dialog, click the Copy icon to copy the account identifier, and save it securely.

The account identifier is provided in the format <orgname>.<account_name>. To convert it to the account URL, substitute the values of orgname and account_name in the format https://<orgname>-<account_name>.snowflakecomputing.com.

For example, if the account identifier is KRCHCUO.CN07513, the account URL is https://krchcuo-cn07513.snowflakecomputing.com.

Use this URL while configuring your Destination.

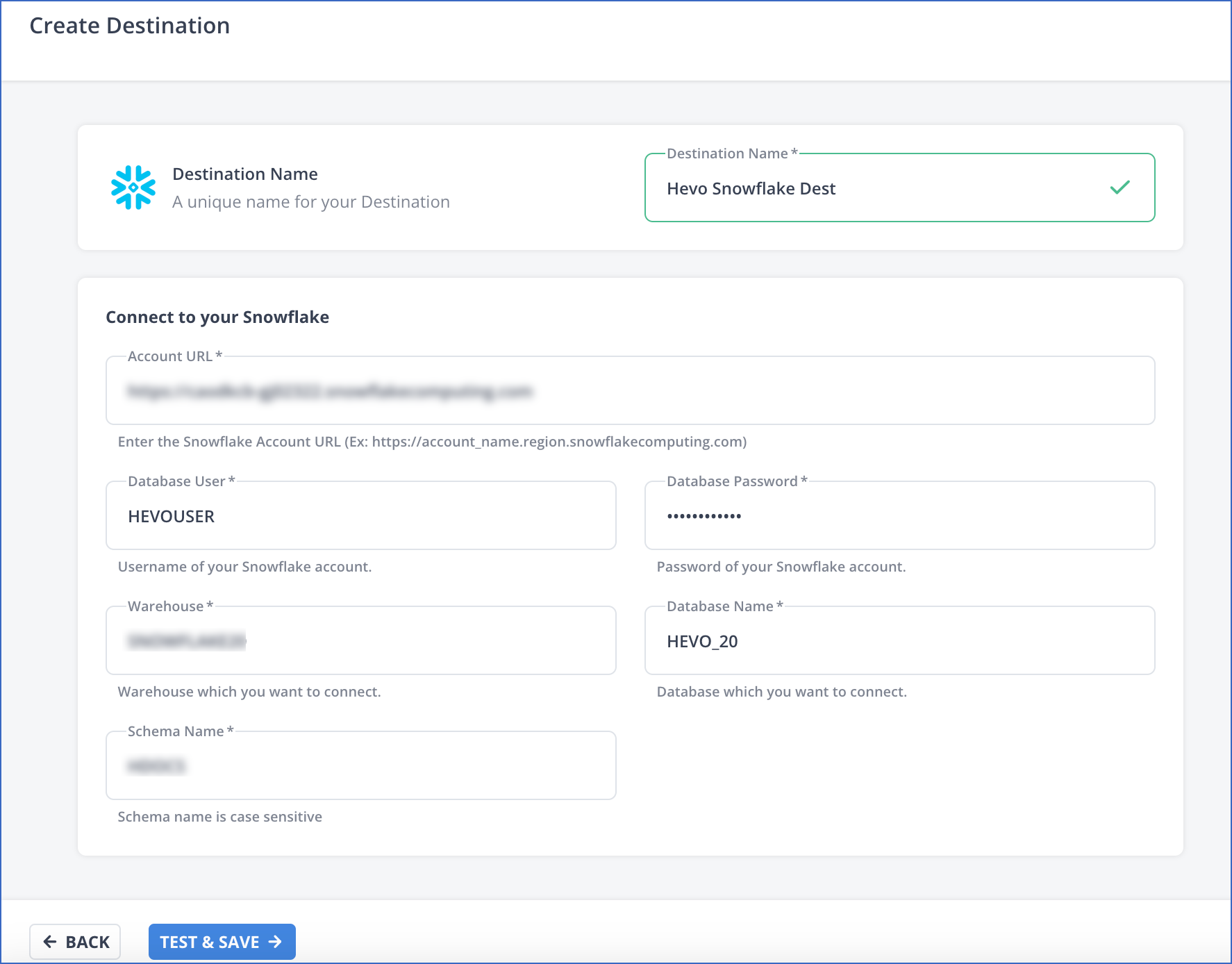

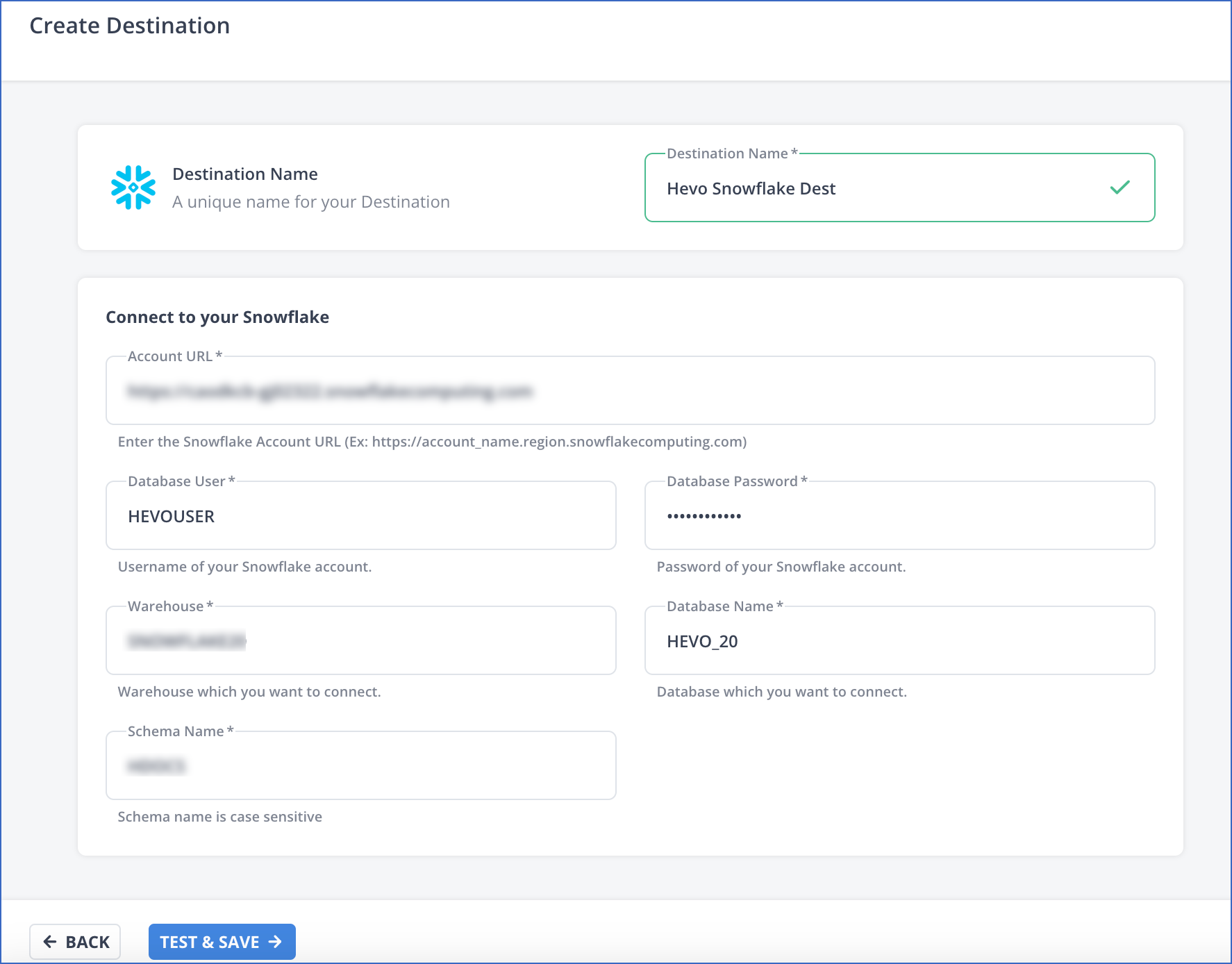

Perform the following steps to configure Snowflake as a Destination in Hevo:

-

Click DESTINATIONS in the Navigation Bar.

-

Click the Edge tab in the Destinations List View and click + CREATE EDGE DESTINATION.

-

On the Create Destination page, click Snowflake.

-

In the screen that appears, specify the following:

-

Destination Name: A unique name for your Destination.

-

Account URL: The Snowflake account URL that you retrieved in the Obtain your Snowflake Account URL step of the Getting Started section.

-

Database User: The Hevo user that you created in the Create and Configure your Snowflake Warehouse step of the Getting Started section. This user has a non-administrative role in the Snowflake database.

-

Database Password: The password of your database user.

-

Warehouse: The Snowflake warehouse associated with your database, where the data is managed.

-

Database Name: The name of the database where the data is to be loaded.

-

Schema Name: The name of the schema in the database where the data is to be loaded.

Note: All the field values are case-sensitive.

-

Click TEST & SAVE.

Data Type Mapping

Hevo maps a Source data type internally to a unified data type, referred to as the Hevo Data Type in the table below. This data type is used to represent the Source data from all supported data types in a lossless manner. The Hevo data types are then mapped to the corresponding data types that are supported in each Destination.

| Hevo Data Type |

Snowflake Data Type |

| ARRAY |

ARRAY |

| BOOLEAN |

BOOLEAN |

| BYTEARRAY |

BINARY |

| BYTE |

BYTEINT |

| DATE |

DATE |

- DATETIME

- TIMESTAMP |

TIMESTAMP_NTZ |

| DECIMAL |

NUMBER |

| DOUBLE |

DOUBLE |

| FLOAT |

FLOAT |

| INTEGER |

INTEGER |

| JSON |

VARIANT |

| LONG |

BIGINT |

| SHORT |

SMALLINT |

| TIME |

TIME |

- TIMESTAMPTZ

- TIMETZ

- ZONEDDATETIME |

TIMESTAMP_TZ |

| VARCHAR |

VARCHAR |

Destination Considerations

- Snowflake converts the Source table and column names to uppercase while mapping to the Destination table. For example, the Source table, Table_namE_05, is converted to TABLE_NAME_05. The same conventions apply to column names.

Limitations

- Hevo replicates a maximum of 4096 columns to each Snowflake table, of which six are Hevo-reserved metadata columns used during data replication. Therefore, your Pipeline can replicate up to 4090 (4096-6) columns for each table.