Release Version 2.31

This release note also includes the fixes provided in all the minor releases since 2.30.

For the complete list of features available for early adoption before they are made generally available to all customers, read our Early Access page.

New and Changed Features

Destinations

Enhanced Configuration for Databricks Destinations

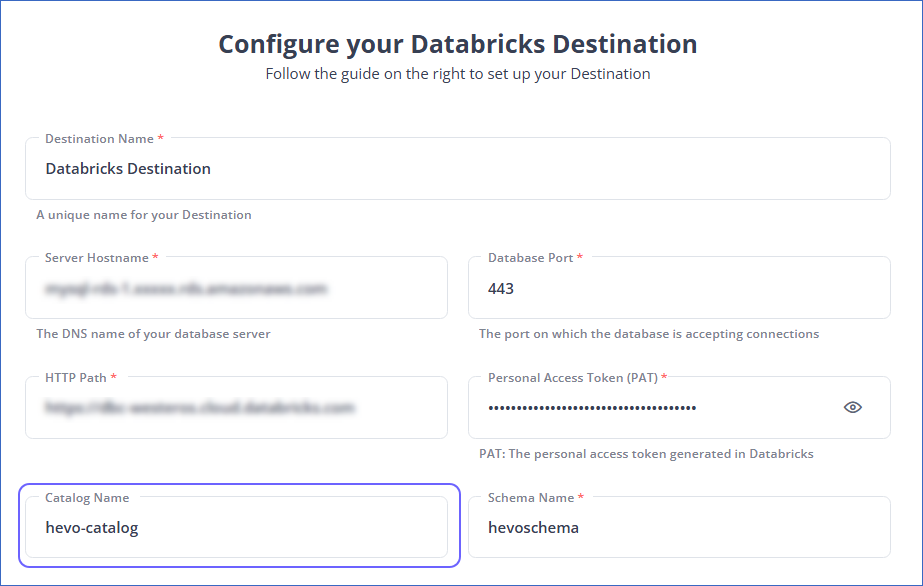

Enhanced User Interface for Configuring Databricks as a Destination (Added in Release 2.30.1)

Introduced the Catalog Name field on the configuration page, allowing you to specify the catalog and schema names separately. Earlier, if your catalog was not hive_metastore, you had to provide the catalog name along with the schema name in the Schema Name field. This change makes the setup process more intuitive.

Support for Unity Catalog with Databricks Partner Connect

Hevo now supports Unity Catalog when configuring Databricks as a Destination through Databricks Partner Connect. Earlier, you had to use hive_metastore as the default workspace catalog. With this update, you can select Unity Catalog as your workspace catalog, improving compatibility for Databricks Destinations.

Sources

Support for Additional Fields in the Intercom Conversations Object (Added in Release 2.30.2)

Hevo now supports replicating the following new fields in the Conversations object:

| Name | Description |
|---|---|
| custom_attributes | Stores custom metadata about a conversation, such as tags and additional properties. |
| title | Represents the subject of a conversation. |
| admin_assignee_id | The ID of the admin assigned to the conversation. |
| team_assignee_id | The ID of the team responsible for the conversation. |

This enhancement allows for more comprehensive data replication, enabling better tracking and analysis of conversation-related data in your Destination. To enable the replication of these new fields in your new and existing Pipelines, contact Hevo Support.

Support for Tiers Field in Stripe Price Object

Added support for replicating data from the tiers field in the Stripe Schema v1 Price object. This field contains information about flexible volume-based pricing, where prices vary based on predefined usage ranges. This field is included only when the billing_scheme field is set to tiered in Stripe and is represented as an array of objects in the Schema Mapper.
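To illustrate how volume-based tiers work, the following Python sketch picks the per-unit price for a given quantity. It assumes a simplified tier shape containing only `up_to` and `unit_amount`, with tiers sorted by ascending `up_to` and the last, unbounded tier marked by `up_to` set to `None`. Actual Stripe tier objects carry more fields (for example, `flat_amount`), and `volume_unit_amount` is a hypothetical helper, not part of Hevo or the Stripe SDK.

```python
from typing import List, Optional, TypedDict


class Tier(TypedDict):
    up_to: Optional[int]  # upper bound of the range; None marks the last, unbounded tier
    unit_amount: int      # price per unit in the smallest currency unit (for example, cents)


def volume_unit_amount(tiers: List[Tier], quantity: int) -> int:
    """Return the per-unit price of the first tier whose range contains `quantity`.

    Assumes `tiers` is sorted by ascending `up_to`.
    """
    for tier in tiers:
        if tier["up_to"] is None or quantity <= tier["up_to"]:
            return tier["unit_amount"]
    raise ValueError(f"no tier covers quantity {quantity}")


# Hypothetical tiers: 1-100 units, 101-1000 units, and everything above 1000.
tiers: List[Tier] = [
    {"up_to": 100, "unit_amount": 500},
    {"up_to": 1000, "unit_amount": 400},
    {"up_to": None, "unit_amount": 300},
]

print(volume_unit_amount(tiers, 250))  # 400
```

In this sketch, the quantity alone decides which usage range applies, which is the kind of information the replicated tiers array lets you reconstruct in your Destination.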

Updated Default Ingestion Frequency for YouTube Analytics

The default ingestion frequency for Pipelines created with the YouTube Analytics Source has been updated to 12 hours. This change helps manage resources effectively when high volumes of data are being ingested from this Source.

Fixes and Improvements

Performance

Handling Data Loading Issue for Snowflake Destinations (Fixed in Release 2.30.1)

Fixed an issue that affected data loading in Snowflake Destinations when frequent schema changes were made to the Destination table, delaying data availability and reducing throughput. Because schema changes are not propagated immediately, the data is segregated into files based on whether it follows the changed or the unchanged schema. Earlier, the load job processed these files individually and in sequence, starting with the oldest file, which caused the throughput issue.

After the fix, whenever a load is scheduled, all available files are picked and grouped by their schema. Each group is then loaded in a separate transaction to your Snowflake Destination, thereby increasing the throughput for the Destination table.
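The grouping step described above can be sketched in Python as follows. This is an illustrative model under stated assumptions, not Hevo's implementation: `StagedFile`, its `schema_version` and `created_at` fields, and the `load_group` callback are hypothetical names, and each `load_group` call stands in for one Snowflake transaction.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class StagedFile:
    name: str
    schema_version: int  # hypothetical: identifies the table schema the file was written with
    created_at: int      # hypothetical: creation order, used to keep files in sequence


def group_files_by_schema(files: List[StagedFile]) -> Dict[int, List[StagedFile]]:
    """Group all available files by schema, keeping oldest-first order within each group."""
    groups: Dict[int, List[StagedFile]] = defaultdict(list)
    for staged in sorted(files, key=lambda f: f.created_at):
        groups[staged.schema_version].append(staged)
    return dict(groups)


def load_scheduled_files(
    files: List[StagedFile],
    load_group: Callable[[int, List[StagedFile]], None],
) -> int:
    """Issue one load per schema group instead of one load per file; return the load count."""
    groups = group_files_by_schema(files)
    for version in sorted(groups):  # assumption: older schema versions are loaded first
        load_group(version, groups[version])
    return len(groups)


files = [
    StagedFile("part-1", schema_version=1, created_at=1),
    StagedFile("part-2", schema_version=2, created_at=2),
    StagedFile("part-3", schema_version=2, created_at=3),
]
loads = load_scheduled_files(files, lambda v, group: print(v, [f.name for f in group]))
# Prints "1 ['part-1']" and "2 ['part-2', 'part-3']": two loads for three files.
```

In this model, the number of loads grows with the number of distinct schemas rather than the number of files, which is where the throughput gain comes from.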

Sources

Handling Incorrect Offset Management in BigCommerce Source (Fixed in Release 2.30.1)

Fixed an issue in the BigCommerce integration where Hevo was unable to ingest data from the Orders and Products objects during incremental loads. This problem occurred because the offset for these objects was incorrectly set to null if no records were ingested during a poll. As a result, the next poll fetched only records with a timestamp equal to or later than the current time, skipping records with earlier timestamps. If no records were present at the current time, the offset remained null.

After the fix, the system correctly sets the offset to the timestamp of the last retrieved record in the previous poll. If no records were retrieved, the offset is set to the timestamp when the previous poll started. This ensures no records are skipped and all incremental data is ingested.
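The corrected offset rule can be expressed as a small Python function, shown next to the earlier behavior for contrast. The function names are hypothetical, and the sketch assumes the records in a poll are returned in ascending timestamp order, so the last retrieved record carries the latest timestamp.

```python
from datetime import datetime, timezone
from typing import Optional, Sequence


def next_offset_before_fix(record_times: Sequence[datetime]) -> Optional[datetime]:
    # Old behavior: an empty poll reset the offset to None, so the next poll
    # only looked at records from the current time onward.
    return record_times[-1] if record_times else None


def next_offset_after_fix(record_times: Sequence[datetime], poll_started_at: datetime) -> datetime:
    # New behavior: keep the last retrieved record's timestamp, or fall back
    # to the time the previous poll started when nothing was retrieved.
    return record_times[-1] if record_times else poll_started_at


poll_start = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(next_offset_before_fix([]))             # None
print(next_offset_after_fix([], poll_start))  # 2024-01-01 12:00:00+00:00
```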

Handling Incremental Data Mismatch Issue in AppsFlyer (Fixed in Release 2.30.2)

Fixed an issue in the AppsFlyer integration where the API call did not fetch all incremental data from the Daily Reports object. AppsFlyer updates this object every 24 hours, so records can be modified after Hevo has already moved the object's offset past them. Because each Pipeline run starts fetching data from the current offset, such records were missed, leading to a data mismatch between the Source and the Destination.

After the fix, the Daily Reports object is polled for data records that were updated in the past 48 hours, which may lead to increased Event consumption by your Pipeline and affect billing. If you observe a mismatch between the Source and Destination data for the Daily Reports object, contact Hevo Support to enable this fix for your new and existing Pipelines.
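A lookback window like the one described above can be sketched in Python as follows. Only the 48-hour value comes from this release note; the `poll_window` helper is hypothetical, and starting from the older of the stored offset and the lookback boundary is an assumption made so that a long gap between runs cannot skip data.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple

LOOKBACK = timedelta(hours=48)  # window stated in the release note


def poll_window(offset: datetime, now: datetime) -> Tuple[datetime, datetime]:
    """Return the (start, end) range of update times to request in this run."""
    # Re-read the last 48 hours so records updated after the offset moved are
    # picked up again; this overlap is why Event consumption can increase.
    start = min(offset, now - LOOKBACK)
    return start, now


now = datetime(2024, 1, 3, 0, 0, tzinfo=timezone.utc)
start, end = poll_window(offset=now - timedelta(hours=1), now=now)
print(start)  # 2024-01-01 00:00:00+00:00
```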

Handling Missing Records in Objects from NetSuite Analytics Source

Fixed an issue where the polling task did not fetch all the records during incremental data ingestion. This problem occurred because records became available in the Source only after a delay. To address this, a 15-minute buffer has been introduced in the polling task: the task now starts fetching records from 15 minutes before the last modified timestamp. Because of this overlap, your Destination tables may contain duplicate data. To handle this, define a primary key for your Destination table, as Hevo performs deduplication using primary keys.

The fix applies to new and existing Pipelines created with NetSuite Analytics Source. By default, the buffer is set to 15 minutes. For custom buffer configurations, contact Hevo Support.
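The buffer and the primary-key deduplication it relies on can be sketched together in Python. This is an illustration under assumptions, not Hevo's code: `fetch_start` and `upsert_by_primary_key` are hypothetical helpers, the Destination table is modeled as an in-memory dictionary keyed by primary key, and `id` is an example key column.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List

BUFFER = timedelta(minutes=15)  # default buffer stated in the release note


def fetch_start(last_modified: datetime, buffer: timedelta = BUFFER) -> datetime:
    """Start the next poll `buffer` earlier than the last modified timestamp."""
    return last_modified - buffer


def upsert_by_primary_key(
    table: Dict[Any, Dict[str, Any]], records: List[Dict[str, Any]], key: str
) -> None:
    """Write records keyed by primary key, so re-fetched rows overwrite instead of duplicating."""
    for record in records:
        table[record[key]] = record


table: Dict[Any, Dict[str, Any]] = {}
upsert_by_primary_key(table, [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}], key="id")
# The overlapping poll returns record 2 again, plus a late-arriving record 3.
upsert_by_primary_key(table, [{"id": 2, "amount": 20}, {"id": 3, "amount": 30}], key="id")
print(len(table))  # 3
print(fetch_start(datetime(2024, 1, 1, 12, 0)))  # 2024-01-01 11:45:00
```

Without a primary key, the second batch would add a second copy of record 2, which is the duplication the release note warns about.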

Improved Data Ingestion for the Events object in Stripe Source

Improved the polling mechanism used for fetching data from the Events object to address data mismatches, excessive API calls, and Pipeline failures. The updated approach uses an efficient offset tracking system to ensure all records are captured in the correct order. The improvement applies to all new and existing Pipelines. It will be deployed to teams across all regions in a phased manner and does not require any action from you.

User Experience

Handling Data Ingestion Issues in Salesforce Bulk API V2 Source (Fixed in Release 2.30.3)

Fixed the following issues in the Salesforce Bulk API V2 integration:

- Incorrect deferred ingestion: The Use Bulk API to query the object option appeared disabled in the user interface but continued to function as enabled. When disabled, this option prevents deferred ingestion while querying unsupported fields. With this fix, the system correctly applies the setting to disable Bulk API queries and prevent deferred ingestion. The fix applies to both new and existing Pipelines.

- Historical load failure: Historical data loads were failing because Hevo repeatedly attempted to fetch data from a failed job in Salesforce. Hevo tracks a job's progress using its job ID, and the system did not reset the ID of the failed job. With this fix, the system resets the job ID, allowing a new job to be created and ensuring that historical data is ingested. This fix applies to all new and existing Pipelines.
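The job ID reset in the historical load fix can be modeled as a small state update in Python. `HistoricalLoadState`, `next_job_id`, and the `get_status` and `create_job` callbacks are hypothetical stand-ins for Hevo's internals and for calls to Salesforce; the sketch assumes a failed job reports the status `Failed`, one of the job states used by Bulk API 2.0.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class HistoricalLoadState:
    job_id: Optional[str] = None  # ID used to track the current Salesforce job


def next_job_id(
    state: HistoricalLoadState,
    get_status: Callable[[str], str],
    create_job: Callable[[], str],
) -> str:
    """Return the job to poll, replacing a failed job with a new one."""
    if state.job_id is not None and get_status(state.job_id) == "Failed":
        state.job_id = None  # the fix: stop tracking the failed job
    if state.job_id is None:
        state.job_id = create_job()
    return state.job_id


state = HistoricalLoadState(job_id="job-1")
print(next_job_id(state, get_status=lambda _: "Failed", create_job=lambda: "job-2"))  # job-2
```

Without the reset step, every run would keep polling `job-1` and the historical load would never progress.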

Documentation Updates

The following pages have been created, enhanced, or removed in Release 2.31: